NodeJs: Difference between revisions


Goto https://node.green/ for feature list and when | Goto https://node.green/ for feature list and when | ||

==NVM== | ==NVM== | ||

===Useful Commands===

We can manage Node versions using nvm, which lets you switch between installed versions of Node.

<br> | |||

To show current | |||

<syntaxhighlight lang="bash"> | |||

nvm current | |||

</syntaxhighlight> | |||

To show installed | |||

<syntaxhighlight lang="bash"> | |||

nvm ls | |||

</syntaxhighlight> | |||

To set the default we can type | |||

<syntaxhighlight lang="bash"> | <syntaxhighlight lang="bash"> | ||

nvm alias default 20.0.0

</syntaxhighlight> | </syntaxhighlight> | ||

===Issues=== | |||

For me this did not work because I had a node_modules directory in ${HOME}. Renaming or removing it made nvm work again. I also keep a script, setnode.sh, which does not fix this problem but which I thought was useful

<syntaxhighlight lang="bash"> | |||

#!/usr/bin/bash | |||

# initialize nvm | |||

export NVM_DIR="$HOME/.nvm" | |||

[ -s "$NVM_DIR/nvm.sh" ] && \. "$NVM_DIR/nvm.sh" # This loads nvm | |||

DEFAULT_NVM_VERSION=20 | |||

nvm cache clear | |||

nvm install $DEFAULT_NVM_VERSION | |||

nvm alias default $DEFAULT_NVM_VERSION | |||

NVERS=$(nvm ls --no-alias | grep -v -- "->" | grep -o "v[0-9.]*")

# Quote the here-string so each version stays on its own line

while read -r ver; do nvm uninstall "$ver"; done <<< "$NVERS"

while read -r ver; do nvm install "$ver"; done <<< "$NVERS"

nvm use $DEFAULT_NVM_VERSION | |||

</syntaxhighlight> | |||

==Starting a Project== | ==Starting a Project== | ||

To start a project type. This creates a package.json. | To start a project type. This creates a package.json. | ||

| Line 946: | Line 976: | ||

npm i express-session | npm i express-session | ||

</syntaxhighlight> | </syntaxhighlight> | ||

===Express Session===

We need to make sure that our sessions are secure therefore we need to be careful when creating and storing information in a session. | |||

<syntaxhighlight lang="js"> | |||

app.use(session({ | |||

secret: ['veryimportantsecret','notsoimportantsecret','highlyprobablysecret'], | |||

name: "secretname", // this helps reduce risk for fingerprinting | |||

cookie: { | |||

httpOnly: true, // do not let browser javascript access cookie ever | |||

secure: true, // only use cookie over https | |||

sameSite: true, // true means 'strict': the cookie is not sent on cross-site requests

maxAge: 600000, // time is in milliseconds, i.e. 600 seconds

ephemeral: true, // intended to delete the cookie when the browser closes; not listed among the express-session cookie options

} | |||

})) | |||

</syntaxhighlight> | |||

===Express Setup=== | |||

Some of these will of course already exist, but the complete list is shown for ordering purposes. The main one is the passport config, where we are going to store our passport processing.

<syntaxhighlight lang="js"> | |||

const express = require('express'); | |||

const cookieParser = require('cookie-parser'); | |||

const session = require('express-session'); | |||

const morgan = require('morgan'); | |||

const path = require('path'); | |||

const nav = { | |||

nav: [{ link: '/books', title: 'Books' }, | |||

{ link: '/authors', title: 'Authors' }], | |||

title: 'Library', | |||

}; | |||

const homeRouter = require('../routes/homeRouter')(nav); | |||

const bookRouter = require('../routes/bookRouter')(nav); | |||

const adminRouter = require('../routes/adminRouter')(nav); | |||

const authRouter = require('../routes/authRouter')(); | |||

function expressApp(baseDirectory) { | |||

// Instance of express | |||

const expressInstance = express(); | |||

// Needed to read body data | |||

expressInstance.use(express.json()); | |||

// Needed to parse cookies

expressInstance.use(cookieParser());

// Needed for sessions

expressInstance.use(session({ | |||

secret: 'keyboard cat', | |||

resave: true, | |||

saveUninitialized: true, | |||

})); | |||

require('./passport/passportConfig')(expressInstance); | |||

// Support URL encoded | |||

expressInstance.use(express.urlencoded({ extended: true })); | |||

expressInstance.use(morgan('tiny')); | |||

expressInstance.use(express.static(path.join(baseDirectory, '/public/'))); | |||

expressInstance.use('/css', express.static(path.join(baseDirectory, '/node_modules/bootstrap/dist/css'))); | |||

expressInstance.use('/js', express.static(path.join(baseDirectory, '/node_modules/bootstrap/dist/js'))); | |||

expressInstance.use('/js', express.static(path.join(baseDirectory, '/node_modules/jquery/dist'))); | |||

expressInstance.set('views', './src/views'); | |||

expressInstance.set('view engine', 'ejs'); | |||

expressInstance.use('/books', bookRouter); | |||

expressInstance.use('/admin', adminRouter); | |||

expressInstance.use('/auth', authRouter); | |||

expressInstance.use('/', homeRouter); | |||

return expressInstance; | |||

} | |||

module.exports = (baseDirectory) => expressApp(baseDirectory); | |||

</syntaxhighlight> | |||

===Passport Config=== | |||

This is where the passport framework elements live and we keep them in config/passport/passport.js. This could define what our user will look like once authenticated. '''Note the export with the parameter''' | |||

<syntaxhighlight lang="js"> | |||

const passport = require('passport'); | |||

const debug = require('debug')('app:passport'); | |||

require('./strategies/local.strategy')(); | |||

function passportConfig(app) { | |||

app.use(passport.initialize()); | |||

app.use(passport.session()); | |||

// Store the user | |||

passport.serializeUser((user, done) => { | |||

done(null, user); | |||

}); | |||

// Retrieve the user | |||

passport.deserializeUser((user, done) => { | |||

done(null, user); | |||

}); | |||

} | |||

module.exports = (app) => passportConfig(app); | |||

</syntaxhighlight> | |||

===Passport Strategy=== | |||

This is where we get to write how it works in terms of authentication. Valid or not valid. | |||

<syntaxhighlight lang="js"> | <syntaxhighlight lang="js"> | ||

const passport = require('passport'); | |||

const { Strategy } = require('passport-local'); | |||

const mongoRepository = require('../../../repository/mongoRepository');

function localStrategy() {

const repository = mongoRepository();

// Strategy takes a definition of where to find the username and password,

// and a verify function which checks them

passport.use(new Strategy( | |||

{ | |||

usernameField: 'username', | |||

passwordField: 'password', | |||

}, | |||

(username, password, done) => { | |||

repository.getUser(username).then((user) => {

// NOTE: comparing plain-text passwords is for demonstration only

if (user && user.password === password) {

done(null, user);

} else {

done(null, false);

}

}).catch(done); // pass repository errors on to passport

}, | |||

)); | |||

} | |||

module.exports = () => localStrategy(); | |||

</syntaxhighlight> | </syntaxhighlight> | ||
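The strategy above compares the stored and submitted passwords as plain text, which is only suitable for a demo. Below is a minimal sketch of one alternative, assuming the repository stores a salted scrypt hash instead of the password itself. The helper names hashPassword and verifyPassword and the "salt:hash" storage format are hypothetical and not part of the original repository code.

```javascript
const crypto = require('node:crypto');

// Hypothetical helper: derive a salted hash to store instead of the password
function hashPassword(password) {
  const salt = crypto.randomBytes(16);
  const hash = crypto.scryptSync(password, salt, 64);
  return `${salt.toString('hex')}:${hash.toString('hex')}`;
}

// Hypothetical helper: recompute the hash and compare in constant time
function verifyPassword(password, stored) {
  const [saltHex, hashHex] = stored.split(':');
  const expected = Buffer.from(hashHex, 'hex');
  const actual = crypto.scryptSync(password, Buffer.from(saltHex, 'hex'), expected.length);
  return crypto.timingSafeEqual(actual, expected);
}

const storedValue = hashPassword('correct horse');
console.log(verifyPassword('correct horse', storedValue)); // true
console.log(verifyPassword('wrong guess', storedValue)); // false
```

Inside the verify callback this would replace the === comparison with something like verifyPassword(password, user.password), assuming user.password holds the stored hash.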

===Router=== | |||

It is the passport.authenticate which is important. | |||

<syntaxhighlight lang="js"> | |||

const express = require('express');

const passport = require('passport'); | |||

const authController = require('../controllers/authController'); | |||

function authRouter() { | |||

const router = express.Router(); | |||

const controller = authController(); | |||

router.post('/signUp', controller.performSignUp); | |||

router.post('/signIn', passport.authenticate('local', { | |||

successRedirect: '/auth/profile', | |||

failureRedirect: '/', | |||

})); | |||

router.get('/profile', controller.performProfile); | |||

return router; | |||

} | |||

module.exports = authRouter; | |||

</syntaxhighlight> | |||

===Controller=== | |||

Nothing special but here for reference | |||

<syntaxhighlight lang="js"> | <syntaxhighlight lang="js"> | ||

const debug = require('debug')('app:authController');

const mongoRepository = require('../repository/mongoRepository'); | |||

function authController() { | |||

const repository = mongoRepository(); | |||

async function performSignUp(req, res) {

const { username, password } = req.body;

const user = await repository.createUser(username, password); | |||

req.login(user, () => { | |||

res.redirect('/auth/profile'); | |||

}); | |||

} | |||

async function performProfile(req, res) { | |||

debug('Doing profile'); | |||

res.json(req.user); | |||

} | |||

return { | |||

performSignUp, performProfile, | |||

}; | |||

} | |||

module.exports = authController;

</syntaxhighlight> | |||

===Guarding Routes=== | |||

We can use middleware to check for the user. If it is not there then computer says no. | |||

<syntaxhighlight lang="js"> | |||

router.use('/', async (req, res, next) => { | |||

if (req.user) { | |||

return next(); | |||

} | |||

return res.redirect('/'); | |||

}); | |||

</syntaxhighlight> | </syntaxhighlight> | ||

==Databases== | ==Databases== | ||

===MS SQL Example=== | ===MS SQL Example=== | ||

Instructions for MS SQL can be found at [[MS SQL Setup]]. Nothing too shocking here.

====Connect to DB==== | |||

<syntaxhighlight lang="js"> | <syntaxhighlight lang="js"> | ||

const sql = require('mssql'); | const sql = require('mssql'); | ||

| Line 1,008: | Line 1,205: | ||

sql.connect(config).catch((err) => debug(err));

</syntaxhighlight> | </syntaxhighlight> | ||

====Perform a Query==== | |||

This is asynchronous so we will need to wait for our results when we use the connection. Here is an example of that. | This is asynchronous so we will need to wait for our results when we use the connection. Here is an example of that. | ||

<syntaxhighlight lang="js"> | <syntaxhighlight lang="js"> | ||

| Line 1,024: | Line 1,222: | ||

); | ); | ||

</syntaxhighlight> | </syntaxhighlight> | ||

====Perform a Query with Parameters==== | |||

We can use parameters with this syntax | We can use parameters with this syntax | ||

<syntaxhighlight lang="js"> | <syntaxhighlight lang="js"> | ||

| Line 1,031: | Line 1,230: | ||

.query('select * from books where id = @id'); | .query('select * from books where id = @id'); | ||

</syntaxhighlight> | </syntaxhighlight> | ||

===Mongo DB=== | ===Mongo DB=== | ||

Here we are going to use mongo db to achieve the same goal as MS SQL. With this we will be using mongodb which is a schemaless approach unlike mongoose. | Here we are going to use mongo db to achieve the same goal as MS SQL. With this we will be using mongodb which is a schemaless approach unlike mongoose. | ||

====Connect to DB==== | |||

This connects to the DB but also inserts the records into the database based off an object books which is not defined here. | |||

<syntaxhighlight lang="js"> | <syntaxhighlight lang="js"> | ||

const { MongoClient } = require('mongodb'); | const { MongoClient } = require('mongodb'); | ||

| Line 1,051: | Line 1,253: | ||

db.books.find().pretty() | db.books.find().pretty() | ||

</syntaxhighlight> | </syntaxhighlight> | ||

====Reading All or one==== | |||

Clearly we need to pass the connection around and some error handling on failure. | |||

<syntaxhighlight lang="js"> | |||

const { ObjectId, MongoClient } = require('mongodb'); | |||

const url = 'mongodb://localhost:27017'; | |||

const dbName = 'libraryApp'; | |||

const client = await MongoClient.connect(url); | |||

const db = client.db(dbName); | |||

const collection = await db.collection('books'); | |||

// For all | |||

const books = await collection.find().toArray(); | |||

// And for one | |||

const book = await collection.findOne({ _id: new ObjectId(id) });

client.close();

</syntaxhighlight> | |||

==External Service Example== | |||

This is an example of calling a service in nodejs. With this service we receive the data with axios and in the format of xml. So as ever lets install | |||

<syntaxhighlight lang="bash"> | |||

npm i axios | |||

npm i xml2js | |||

</syntaxhighlight> | |||

=Installing NodeJS= | |||

To install nodejs you need to use nvm as ubuntu is always behind the eight ball. Just do the following. Note this changes your profile so you might want to reboot. | |||

<syntaxhighlight lang="bash"> | |||

# Get the nvm manager and install | |||

wget -qO- https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.3/install.sh | bash

# Until you log in again, reload your profile in the current shell

source ~/.profile | |||

# Check version of nvm | |||

nvm --version | |||

# List node versions | |||

nvm ls-remote | |||

# Install your fav | |||

nvm install 20.0.0 | |||

# Check working version | |||

node -v | |||

</syntaxhighlight> | |||

=Replace Buffer= | |||

From Sindre Sorhus at [https://sindresorhus.com/blog/goodbye-nodejs-buffer here] | |||

<syntaxhighlight lang="dpatch"> | |||

+import {stringToBase64} from 'uint8array-extras'; | |||

-Buffer.from(string).toString('base64'); | |||

+stringToBase64(string); | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="dpatch"> | |||

import {uint8ArrayToHex} from 'uint8array-extras'; | |||

-buffer.toString('hex'); | |||

+uint8ArrayToHex(uint8Array); | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="dpatch"> | |||

const bytes = getBytes(); | |||

+const view = new DataView(bytes.buffer); | |||

-const value = bytes.readInt32BE(1); | |||

+const value = view.getInt32(1); | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="dpatch"> | |||

import crypto from 'node:crypto'; | |||

-import {Buffer} from 'node:buffer'; | |||

+import {isUint8Array} from 'uint8array-extras'; | |||

export default function hash(data) { | |||

- if (!(typeof data === 'string' || Buffer.isBuffer(data))) { | |||

+ if (!(typeof data === 'string' || isUint8Array(data))) { | |||

throw new TypeError('Incorrect type.'); | |||

} | |||

return crypto.createHash('md5').update(data).digest('hex'); | |||

} | |||

</syntaxhighlight> | |||

Typescript | |||

<syntaxhighlight lang="dpatch"> | |||

-import {Buffer} from 'node:buffer'; | |||

-export function getSize(input: string | Buffer): number { … } | |||

+export function getSize(input: string | Uint8Array): number { … } | |||

</syntaxhighlight> | |||

And add these rules to the eslint for javascript | |||

<syntaxhighlight lang="json"> | |||

{ | |||

"no-restricted-globals": [ | |||

"error", | |||

{ | |||

"name": "Buffer", | |||

"message": "Use Uint8Array instead." | |||

} | |||

], | |||

"no-restricted-imports": [ | |||

"error", | |||

{ | |||

"name": "buffer", | |||

"message": "Use Uint8Array instead." | |||

}, | |||

{ | |||

"name": "node:buffer", | |||

"message": "Use Uint8Array instead." | |||

} | |||

]

} | |||

</syntaxhighlight> | |||

For typescript we can add this to @typescript-eslint/ban-types | |||

<syntaxhighlight lang="json"> | |||

"@typescript-eslint/ban-types": [ | |||

"error", | |||

{ | |||

"extendDefaults": true, | |||

"types": { | |||

"object": false, | |||

"{}": false, | |||

"Buffer": { | |||

"message": "Use Uint8Array instead.", | |||

"suggest": ["Uint8Array"] | |||

} | |||

} | |||

} | |||

], | |||

</syntaxhighlight> | |||

=Module Wars= | |||

==CommonJS== | |||

Node modules were written as CommonJS modules. This means they used | |||

<syntaxhighlight lang="js">

// To export

module.exports.sum = (x, y) => x + y; | |||

// To Import | |||

const {sum} = require('./util.js'); | |||

</syntaxhighlight> | |||

==ESM== | |||

ESM uses this syntax | |||

<syntaxhighlight lang="js">

// To export

export const sum = (x, y) => x + y;

// To Import | |||

import {sum} from './util.js'; | |||

</syntaxhighlight> | |||

=Differences Under the hood= | |||

In CommonJS, require() is synchronous; it doesn't return a promise or call a callback. | |||

<br> | |||

In ESM, the module loader runs in asynchronous phases. In the first phase, it parses the script to detect calls to import and export without running the imported script. | |||
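A small sketch of the difference, run from a CommonJS file: require() hands back the module's exports immediately, while import() (the dynamic form of the ESM import, which is also available in CommonJS) returns a promise. The built-in node:os module is used only as a convenient target.

```javascript
// CommonJS: synchronous, the exports object is usable on the very next line
const osViaRequire = require('node:os');
console.log(typeof osViaRequire.platform); // 'function'

// ESM loader: asynchronous, import() returns a promise for the module namespace
const pendingModule = import('node:os');
console.log(typeof pendingModule.then); // 'function'

pendingModule.then((osViaImport) => {
  // Both routes end up at the same built-in module
  console.log(osViaImport.platform() === osViaRequire.platform()); // true
});
```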

Latest revision as of 01:12, 10 January 2024

Setup

Running start up scripts in node

"scripts": {

"start": "run-p start:dev start:api",

"start:dev": "webpack-dev-server --config webpack.config.dev.js --port 3000",

"prestart:api": "node tools/createMockDb.js",

"start:api": "node tools/apiServer.js"

}

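run-p (from the npm-run-all package) runs multiple scripts in parallel. A script named pre<name> runs automatically before the script called <name>, so prestart:api runs before start:api.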

Adding consts in webpack

To pass dev/production consts we can do this using webpack. Include webpack in your webpack.config.dev.js and use webpack.DefinePlugin e.g.

const webpack = require("webpack")

...

plugins: [

new webpack.DefinePlugin({

"process.env.API_URL": JSON.stringify("http://localhost:3001")

})

],

...

Introduction

Why nodejs

Node is popular because

- Node supports JavaScript

- Non Blocking

- Virtual Machine Single-Thread

Resources

There is a site https://medium.com/edge-coders/how-well-do-you-know-node-js-36b1473c01c8 where you can test your knowledge.

Resources for the course were at https://github.com/jscomplete/advanced-nodejs

Closures

I am really bad at remembering names for things so here is my understanding of Closures

Lexical Scoping

Basically this is driving at the fact that there is such a thing as scope, which may have been new to people in JS at one time. ha ha. I.e. the var is available in init() but not outside of init()

This is an example of lexical scoping, which describes how a parser resolves variable names when functions are nested. The word lexical refers to the fact that lexical scoping uses the location where a variable is declared within the source code to determine where that variable is available. Nested functions have access to variables declared in their outer scope.

function init() {

var name = 'Mozilla'; // name is a local variable created by init

function displayName() { // displayName() is the inner function, a closure

alert(name); // use variable declared in the parent function

}

displayName();

}

init();

Closure Example

This is almost a repeat of the above, except that we return the function to be executed later. The values in scope when makeFunc was called are retained, i.e. name is still 'Mozilla' when we execute myFunc().

A closure is the combination of a function and the lexical environment within which that function was declared. This environment consists of any local variables that were in-scope at the time the closure was created. In this case, myFunc is a reference to the instance of the function displayName that is created when makeFunc is run. The instance of displayName maintains a reference to its lexical environment, within which the variable name exists.

function makeFunc() {

var name = 'Mozilla';

function displayName() {

alert(name);

}

return displayName;

}

var myFunc = makeFunc();

myFunc();
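To make the "retained environment" point more concrete, here is a minimal sketch in which each call to the outer function creates its own private variable. The names makeCounter and increment are made up for illustration and are not part of the original example.

```javascript
function makeCounter() {
  let count = 0; // lives on after makeCounter returns, but only via the closure

  return function increment() {
    count += 1;
    return count;
  };
}

const counterA = makeCounter();
const counterB = makeCounter();

const first = counterA();
const second = counterA();
const other = counterB();

console.log(first, second, other); // 1 2 1
```

counterA and counterB do not share count, because each closure keeps a reference to the lexical environment created by its own call to makeCounter.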

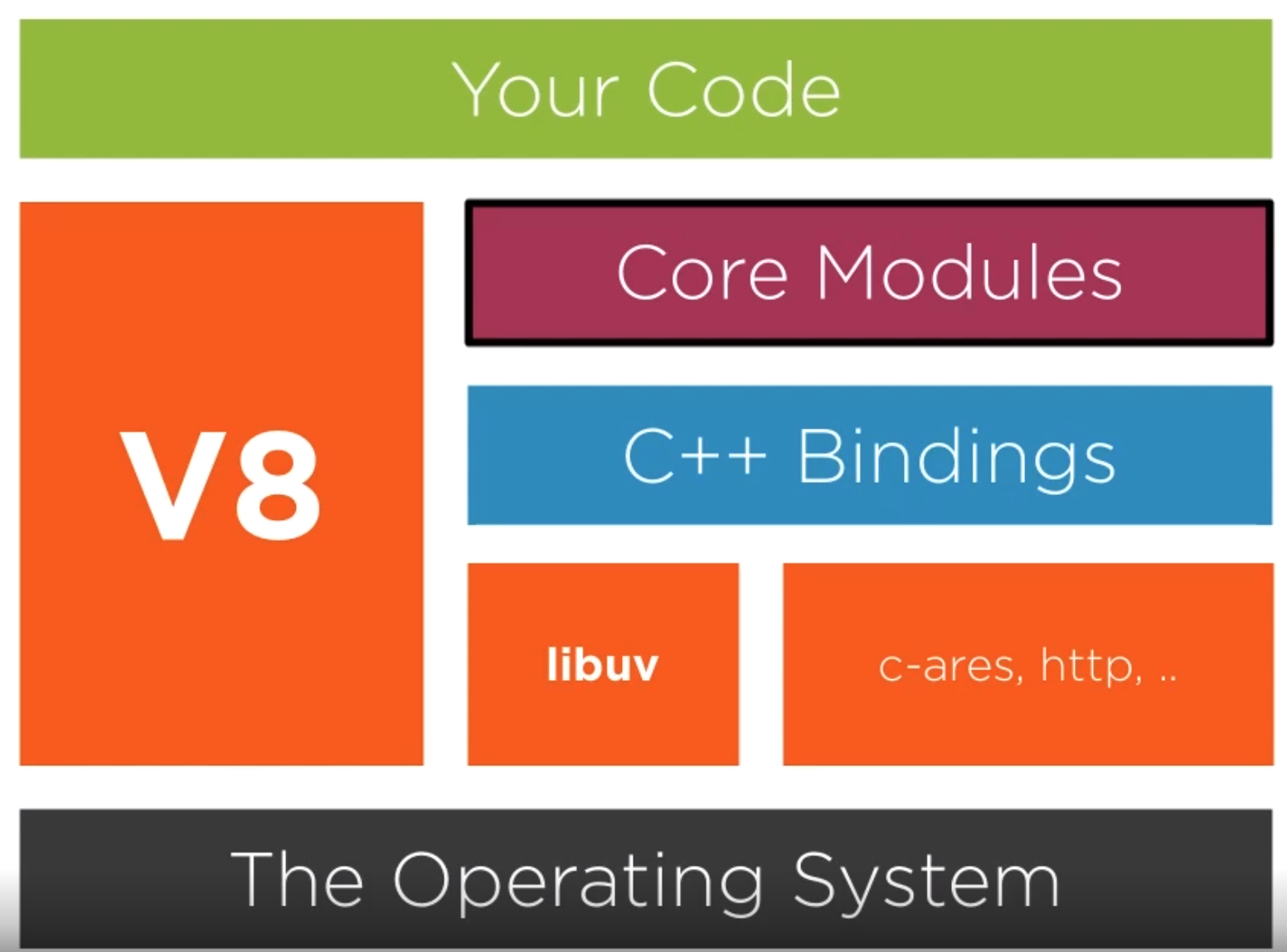

Node Architecture

V8

V8 is Google’s open source high-performance JavaScript and WebAssembly engine, written in C++. It is used in Chrome and in Node.js, among others. It implements ECMAScript and WebAssembly, and runs on Windows 7 or later, macOS 10.12+, and Linux systems that use x64, IA-32, ARM, or MIPS processors. V8 can run standalone, or can be embedded into any C++ application.

libuv is a multi-platform C library that provides support for asynchronous I/O based on event loops. It supports epoll, kqueue, Windows IOCP, and Solaris event ports. It is primarily designed for use in Node.js but it is also used by other software projects.[3] It was originally an abstraction around libev or Microsoft IOCP, as libev supports only select and doesn't support poll and IOCP on Windows.


Chakra

This is an alternative to V8. I could not find much on this cos I had other stuff to do but here is a diagram to make the page look nice. Note the picture is deliberately smaller.

V8 Node Options

The v8 engine has three feature groups: Shipping, Staged and In Progress. These are like channels and you can see which features are supported with

node --v8-options | grep "staged"

Node CLI and REPL

REPL

- autocomplete

- up down history

- _ provides last command value

- dot commands: .break will get you out of a multi-line entry, .save will write the session to a file

Global Object

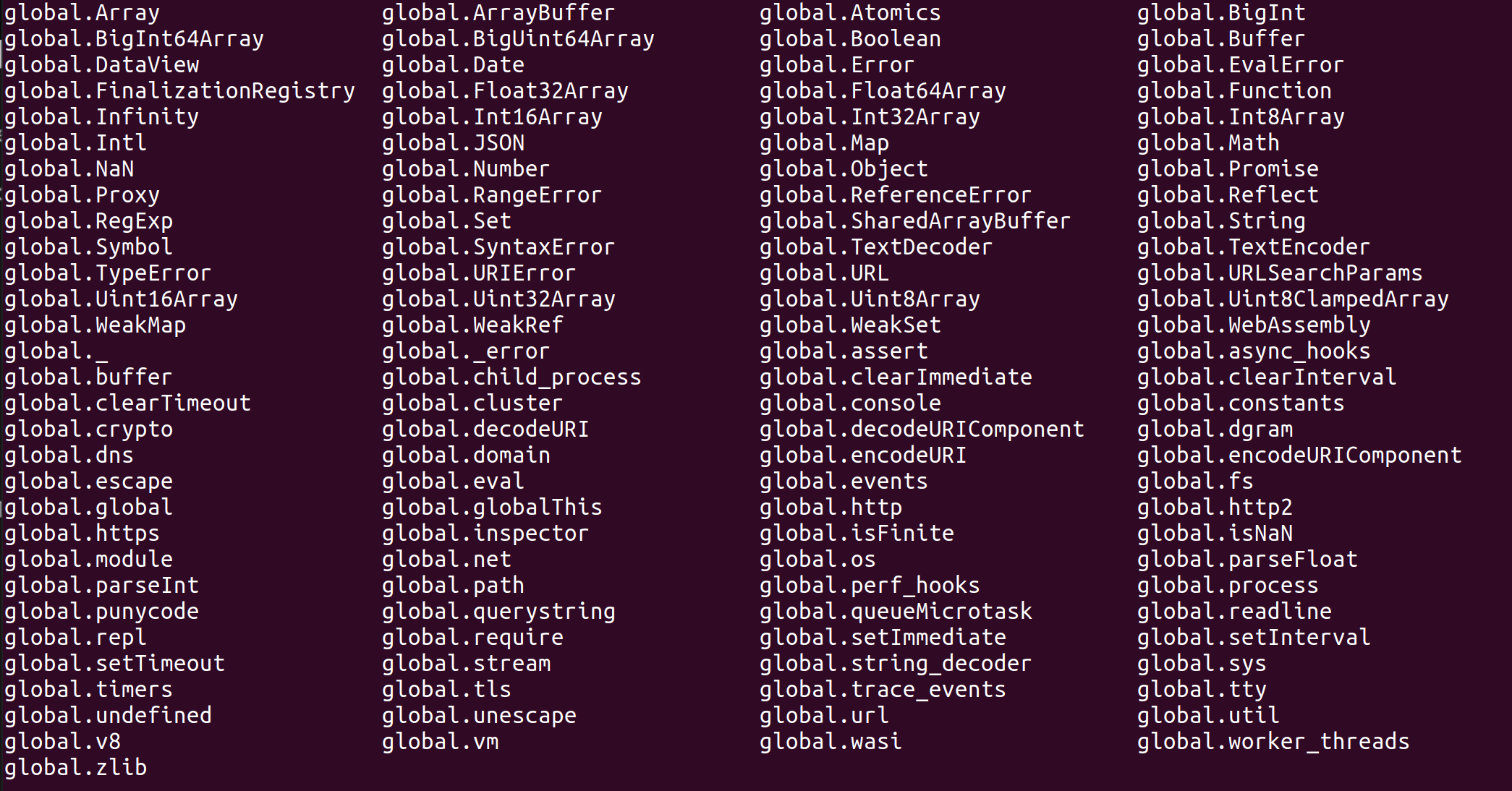

Introduction

The global object supports many functions. This is the core of Node.

For instance you can create a value in global which can be accessed from anywhere


global.myvar = 10000

An example of usage can be global.process which holds the information for a process started in node. So global.process.env will provide the environment variables.

Taking Process As an Example

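In this example we can see that process.on has registered an uncaughtException handler. If the handler did not call process.exit(1), the process would not terminate: registering the handler overrides the default exit-on-error behaviour and process.stdin.resume() keeps the event loop alive.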

// process is an event emitter

process.on('exit', (code) => {

// do one final synchronous operation

// before the node process terminates

console.log(`About to exit with code: ${code}`);

});

process.on('uncaughtException', (err) => {

// something went unhandled.

// Do any cleanup and exit anyway!

console.error(err); // don't do just that.

// FORCE exit the process too.

process.exit(1);

});

// keep the event loop busy

process.stdin.resume();

// trigger a TypeError exception

console.dog();

And the rest

The other functions are like a bash api or c api. They are functions which can be called using javascript.

Require

When we invoke require the following happens

- Resolving

- Loading

- Wrapping

- Evaluating

- Caching
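The resolving and caching steps can be observed directly. This is a minimal sketch that writes a throwaway module to a temporary directory (the file name counter.js and its contents are made up for the demonstration), resolves it, and requires it twice.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Create a throwaway module on disk so the sketch is self-contained
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'require-demo-'));
const file = path.join(dir, 'counter.js');
fs.writeFileSync(file, 'module.exports = { hits: 0 };');

// Resolving: turn the request into an absolute filename without loading it
const resolvedPath = require.resolve(file);

// First require loads, wraps and evaluates the file, then caches the result
const firstLoad = require(file);
firstLoad.hits += 1;

// Second require is served from the cache, so it is the very same object
const secondLoad = require(file);
const sameObject = firstLoad === secondLoad;
const isCached = Object.prototype.hasOwnProperty.call(require.cache, resolvedPath);

console.log(sameObject, secondLoad.hits, isCached); // true 1 true

fs.rmSync(dir, { recursive: true, force: true });
```

Deleting the entry from require.cache would force the next require to load and evaluate the file again.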

By using the repl we can see how it finds the module.

console.log(module)

Module {

id: '<repl>',

path: '.',

exports: {},

parent: undefined,

filename: null,

loaded: false,

children: [],

paths: [

'/home/iwiseman/dev/scratch/android_frag/repl/node_modules',

'/home/iwiseman/dev/scratch/android_frag/node_modules',

'/home/iwiseman/dev/scratch/node_modules',

'/home/iwiseman/dev/node_modules',

'/home/iwiseman/node_modules',

'/home/node_modules',

'/node_modules',

'/home/iwiseman/.node_modules',

'/home/iwiseman/.node_libraries',

'/usr/local/lib/node'

]

}

With require it will try

- something.js

- something.json

- something.node (compiled add-on file)

// hello.cc

#include <node.h>

namespace demo {

using v8::FunctionCallbackInfo;

using v8::Isolate;

using v8::Local;

using v8::Object;

using v8::String;

using v8::Value;

void Method(const FunctionCallbackInfo<Value>& args) {

Isolate* isolate = args.GetIsolate();

args.GetReturnValue().Set(String::NewFromUtf8(

isolate, "world").ToLocalChecked());

}

void Initialize(Local<Object> exports) {

NODE_SET_METHOD(exports, "hello", Method);

}

NODE_MODULE(NODE_GYP_MODULE_NAME, Initialize)

}

npm

With npm we can install from github directly. E.g. npm i expressjs/express will install the latest express. you can confirm this with npm ls express. You can even install from branches e.g. npm i expressjs/express#4.14.0

- npm updated

Event Emitters

Synchronous Example

These can be used in Node Js just like listeners in other languages. "emit" to send and "on" to perform an action. Below is an example of synchronous events

const EventEmitter = require('events');

class WithLog extends EventEmitter {

execute(taskFunc) {

console.log('Before executing');

this.emit('begin');

taskFunc();

this.emit('end');

console.log('After executing');

}

}

const withLog = new WithLog();

withLog.on('begin', () => console.log('About to execute'));

withLog.on('end', () => console.log('Done with execute'));

withLog.execute(() => console.log('*** Executing task ***'));

Asynchronous Example

const fs = require('fs');

const EventEmitter = require('events');

class WithTime extends EventEmitter {

execute(asyncFunc, ...args) {

console.time('execute');

this.emit('begin');

asyncFunc(...args, (err, data) => {

if (err) {

return this.emit('error', err);

}

this.emit('data', data);

console.timeEnd('execute');

this.emit('end');

});

}

}

const withTime = new WithTime();

withTime.on('begin', () => console.log('About to execute'));

withTime.on('end', () => console.log('Done with execute'));

withTime.execute(fs.readFile, __filename);
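One detail of the 'error' event is worth knowing: if it is emitted while no listener is registered, the emitter throws the error instead. A minimal sketch of both cases follows; the variable names are illustrative only.

```javascript
const EventEmitter = require('node:events');

const emitter = new EventEmitter();

// Case 1: no 'error' listener, so emit() throws the error itself
let thrown = null;
try {
  emitter.emit('error', new Error('no listener'));
} catch (err) {
  thrown = err;
}
console.log(thrown.message); // 'no listener'

// Case 2: with a listener registered, the error is delivered to it instead
const handled = [];
emitter.on('error', (err) => handled.push(err.message));
emitter.emit('error', new Error('with listener'));
console.log(handled); // [ 'with listener' ]
```

For the WithTime example this suggests registering withTime.on('error', ...) before calling execute, so a failed read does not crash the process.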

Client Server

Really liked how easy it was to build this example. Probably will never use it but the simplicity made me smile. I also like the process.nextTick() to wait for the load to complete from require('./server')(client);

Client

const EventEmitter = require('events');

const readline = require('readline');

const rl = readline.createInterface({

input: process.stdin,

output: process.stdout

});

const client = new EventEmitter();

const server = require('./server')(client);

server.on('response', (resp) => {

process.stdout.write('\u001B[2J\u001B[0;0f');

process.stdout.write(resp);

process.stdout.write('\n> ');

});

let command, args;

rl.on('line', (input) => {

[command, ...args] = input.split(' ');

client.emit('command', command, args);

});

Server

const EventEmitter = require('events');

class Server extends EventEmitter {

constructor(client) {

super();

this.tasks = {};

this.taskId = 1;

process.nextTick(() => {

this.emit(

'response',

'Type a command (help to list commands)'

);

});

client.on('command', (command, args) => {

switch (command) {

case 'help':

case 'add':

case 'ls':

case 'delete':

this[command](args);

break;

default:

this.emit('response', 'Unknown command...');

}

});

}

tasksString() {

return Object.keys(this.tasks).map(key => {

return `${key}: ${this.tasks[key]}`;

}).join('\n');

}

help() {

this.emit('response', `Available Commands:

add task

ls

delete :id`

);

}

add(args) {

this.tasks[this.taskId] = args.join(' ');

this.emit('response', `Added task ${this.taskId}`);

this.taskId++;

}

ls() {

this.emit('response', `Tasks:\n${this.tasksString()}`);

}

delete(args) {

delete(this.tasks[args[0]]);

this.emit('response', `Deleted task ${args[0]}`);

}

}

module.exports = (client) => new Server(client);

Chat Server

Again just for reference a Chat Server. It shows just how easy software can be. Never used nc before but willing to drop telnet and be an old dog learning new tricks.

const server = require('net').createServer();

let counter = 0;

let sockets = {};

function timestamp() {

const now = new Date();

return `${now.getHours()}:${now.getMinutes()}`;

}

server.on('connection', socket => {

socket.id = counter++;

console.log('Client connected');

socket.write('Please type your name: ');

socket.on('data', data => {

if (!sockets[socket.id]) {

socket.name = data.toString().trim();

socket.write(`Welcome ${socket.name}!\n`);

sockets[socket.id] = socket;

return;

}

Object.entries(sockets).forEach(([key, cs]) => {

if (socket.id == key) return;

cs.write(`${socket.name} ${timestamp()}: `);

cs.write(data);

});

});

socket.on('end', () => {

delete sockets[socket.id];

console.log('Client disconnected');

});

});

server.listen(8000, () => console.log('Server bound'));

DNS

There is a dns module which supports dns.reverse and dns.lookup.

HTTP

Simple HTTP Server Example

This demonstrates that the server will stream by default

const server = require('http').createServer();

server.on('request', (req, res) => {

res.writeHead(200, { 'content-type': 'text/plain' });

res.write('Hello world\n');

setTimeout(function () {

res.write('Another Hello world\n');

}, 10000);

setTimeout(function () {

res.write('Yet Another Hello world\n');

}, 20000);

// res.end('We want it to stop');

});

// server.timeout = 100000 No go on forever!

server.listen(8000)

Simple HTTPS Server Example

And with https

const fs = require('fs');

const server = require('https')

.createServer({

key: fs.readFileSync('./key.pem'),

cert: fs.readFileSync('./cert.pem'),

});

server.on('request', (req, res) => {

res.writeHead(200, { 'content-type': 'text/plain' });

res.end('Hello world\n');

});

server.listen(443);

Simple Routing Server Example

Just loving how simple this all is and comparing it to my golang solution. It should be easy because it is, but this is reminding me of what I already know. Top tip: node -p http.STATUS_CODES

const fs = require('fs');

const server = require('http').createServer();

const data = {};

server.on('request', (req, res) => {

switch (req.url) {

case '/api':

res.writeHead(200, { 'Content-Type': 'application/json' });

res.end(JSON.stringify(data));

break;

case '/home':

case '/about':

res.writeHead(200, { 'Content-Type': 'text/html' });

res.end(fs.readFileSync(`.${req.url}.html`));

break;

case '/':

res.writeHead(301, { 'Location': '/home' });

res.end();

break;

default:

res.writeHead(404);

res.end();

}

});

server.listen(8000);

Simple HTTPS Client Example

const https = require('https');

// req: http.ClientRequest

const req = https.get(

'https://www.google.com',

(res) => {

// res: http.IncomingMessage

console.log(res.statusCode);

console.log(res.headers);

res.on('data', (data) => {

console.log(data.toString());

});

}

);

req.on('error', (e) => console.log(e));

console.log(req.agent); // http.Agent

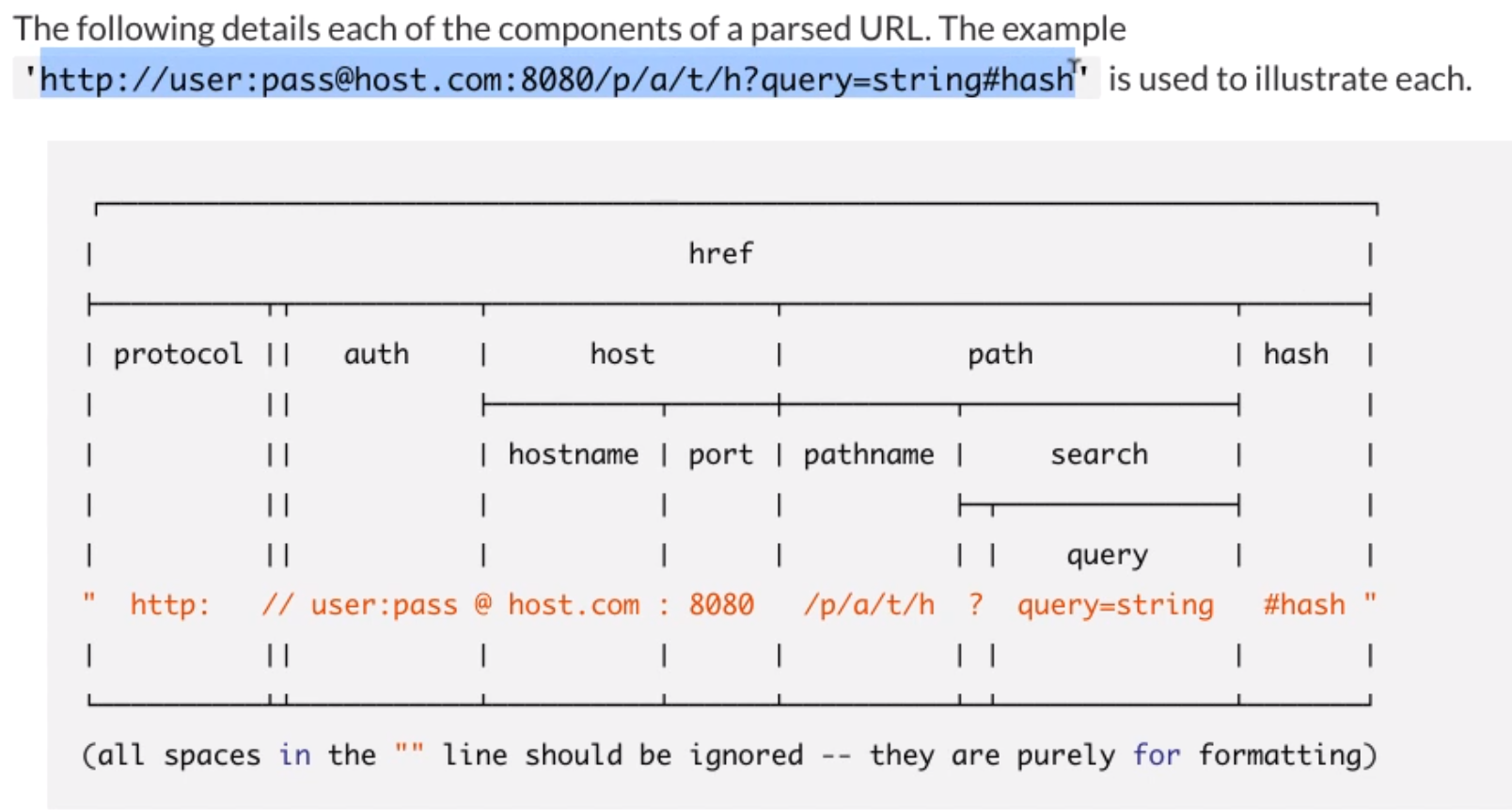

URL Parsing

Here is a diagram from the node documentation showing the various parts of a URL.

We can use url.parse to see the various parts in an object. e.g.

const urlString = 'https://www.pluralsight.com/search?q=buna';

...

// Output

Url {

protocol: 'https:',

slashes: true,

auth: null,

host: 'www.pluralsight.com',

port: null,

hostname: 'www.pluralsight.com',

hash: null,

search: '?q=buna',

query: [Object: null prototype] { q: 'buna' },

pathname: '/search',

path: '/search?q=buna',

href: 'https://www.pluralsight.com/search?q=buna'

}

Equally, we can reverse this with

const urlObject = {

protocol: 'https',

host: 'www.pluralsight.com',

search: '?q=buna',

pathname: '/search',

};

url.format(urlObject)

// Output

// 'https://www.pluralsight.com/search?q=buna'
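The same URL can also be taken apart with the WHATWG URL class, which is a global in current Node versions. This is a minimal sketch of the equivalent operations rather than a drop-in replacement for the url.parse output shown above; note that the query is exposed through searchParams rather than a query object.

```javascript
const parsed = new URL('https://www.pluralsight.com/search?q=buna');

console.log(parsed.protocol); // 'https:'
console.log(parsed.hostname); // 'www.pluralsight.com'
console.log(parsed.pathname); // '/search'
console.log(parsed.searchParams.get('q')); // 'buna'

// Going the other way: change a part and read back the full string
parsed.searchParams.set('q', 'node');
console.log(parsed.href); // 'https://www.pluralsight.com/search?q=node'
```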

Built in Libraries

OS Module

This provides a ton of information around the os. E.g. version. From my old days I did like the node -p os.constants.signals

FS Module

This provides sync and async functions for reading and writing. Here is an example script showing how to use it. It watches the directory and logs file changes.

const fs = require('fs');

const path = require('path');

const dirname = path.join(__dirname, 'files');

const currentFiles = fs.readdirSync(dirname);

const logWithTime = (message) =>

console.log(`${new Date().toUTCString()}: ${message}`);

fs.watch(dirname, (eventType, filename) => {

if (eventType === 'rename') { // add or delete

const index = currentFiles.indexOf(filename);

if (index >= 0) {

currentFiles.splice(index, 1);

logWithTime(`${filename} was removed`);

return;

}

currentFiles.push(filename);

logWithTime(`${filename} was added`);

return;

}

logWithTime(`${filename} was changed`);

});

Console

You can use console to time the functions

console.time("test 1")

// My large jobby

...

console.timeEnd("test 1")

Streams

Types of Streams

- Readable (fs.createReadStream)

- Writable (fs.createWriteStream)

- Duplex (net.Socket)

- Transform (zlib.createGzip)

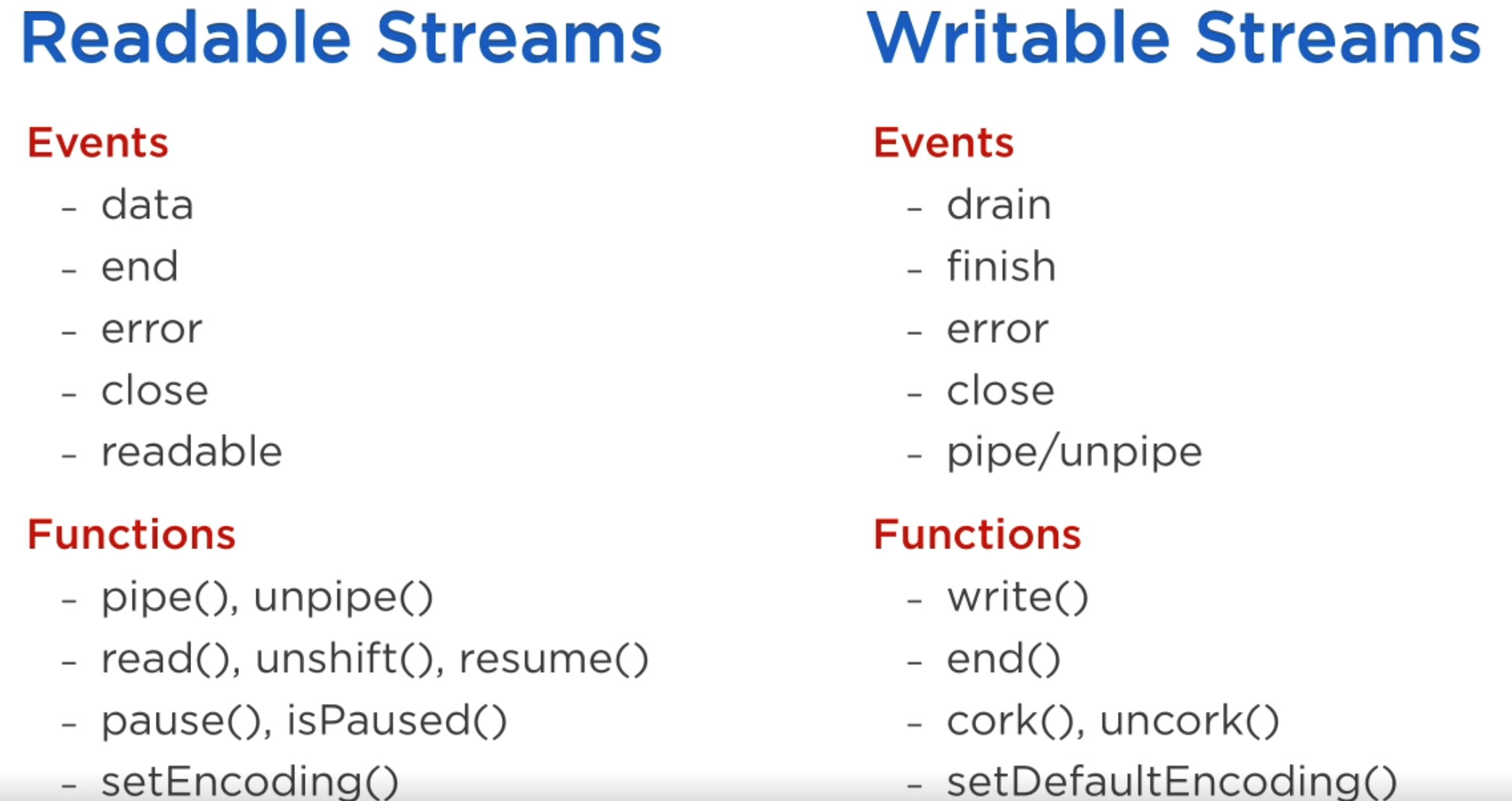

Functions and Events

The following shows a list of some of the functions and events streams support. The important events for Readable are data and end. For Writable they are drain, which signals that it can consume more data, and finish.
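A minimal sketch of the data and end events in action, assuming a small in-memory source piped through a Transform stream. The upperCase transform and the input strings are made up for the demonstration.

```javascript
const { Readable, Transform } = require('node:stream');

// Transform stream: receives chunks, pushes modified chunks downstream
const upperCase = new Transform({
  transform(chunk, encoding, callback) {
    callback(null, chunk.toString().toUpperCase());
  },
});

const received = [];

// Wrap the event handling in a promise so the result can be awaited
const finishedText = new Promise((resolve, reject) => {
  Readable.from(['hello ', 'streams'])
    .pipe(upperCase)
    .on('data', (chunk) => received.push(chunk.toString())) // one call per chunk
    .on('end', () => resolve(received.join(''))) // no more data will arrive
    .on('error', reject);
});

finishedText.then((text) => console.log(text)); // HELLO STREAMS
```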

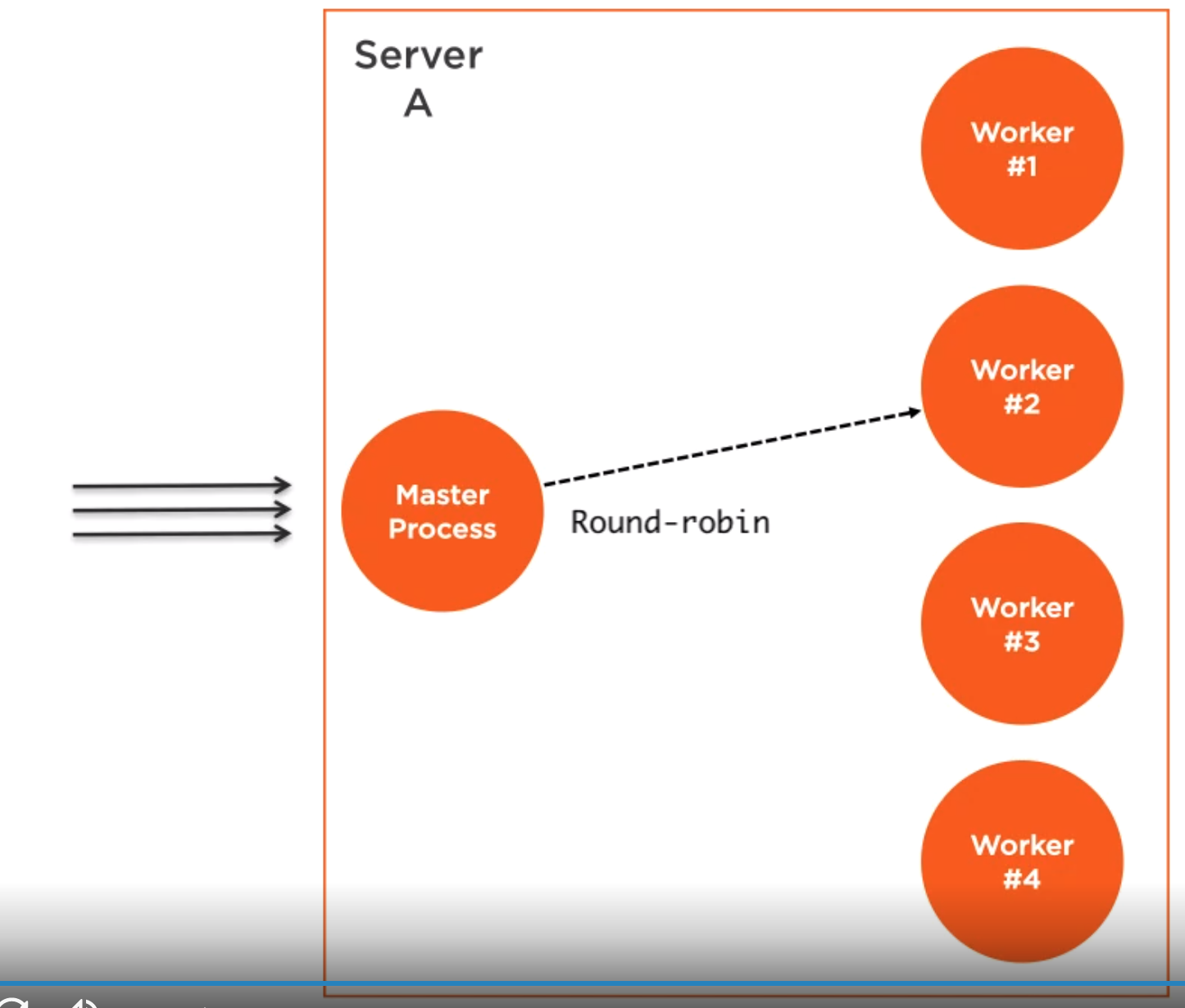
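
To tie the stream types and events together, here is a minimal sketch that connects a readable, a transform and a writable stream. The streams are built in memory instead of using fs or zlib so the example stays self-contained; source, upper, sink and received are names invented for it.

```javascript
const { Readable, Transform, Writable, pipeline } = require('node:stream');

// Readable: emits each array item as a chunk
const source = Readable.from(['a', 'b', 'c']);

// Transform: upper-cases every chunk passing through
const upper = new Transform({
  transform(chunk, encoding, callback) {
    callback(null, chunk.toString().toUpperCase());
  },
});

// Writable: collects what it receives
const received = [];
const sink = new Writable({
  write(chunk, encoding, callback) {
    received.push(chunk.toString());
    callback();
  },
});

source.on('end', () => console.log('source ended'));
sink.on('finish', () => console.log('finished with', received));

// pipeline wires the streams together and reports errors in one place
pipeline(source, upper, sink, (err) => {
  if (err) console.error('pipeline failed', err);
});
```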

Clusters and Child Process

Node js supports the linux concepts of

- spawn (no new shell)

- exec (creates a shell)

- execFile

- fork
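
The difference between the shell and no-shell variants can be sketched as follows. This uses the synchronous versions (execFileSync, spawnSync, execSync) purely to keep the example short; the asynchronous spawn, exec and execFile take similar arguments. The commands being run are arbitrary examples.

```javascript
const { execFileSync, execSync, spawnSync } = require('node:child_process');

// execFile: runs a binary directly with an argument array, no shell involved.
// process.execPath is the node binary running this script.
const viaExecFile = execFileSync(process.execPath, ['-p', '1 + 1'], { encoding: 'utf8' });

// spawn: also no shell by default; exposes the exit status and stdio output
const viaSpawn = spawnSync(process.execPath, ['-p', '2 + 2'], { encoding: 'utf8' });

// exec: the command string is handed to a shell, so shell syntax is available
const viaExec = execSync('echo from-a-shell', { encoding: 'utf8' });

console.log(viaExecFile.trim(), viaSpawn.stdout.trim(), viaExec.trim());
```

fork is the odd one out: it starts another Node process and sets up a message channel to it, which is what the cluster module builds on.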

The cluster module allows us to split the processing across several processes using fork. We can use it for load balancing.

In the demonstration the apachebench tool was used loading 200 connections for 10 seconds. You can look at the Requests per second for a guide

ab -c200 -t10 http://localhost:8080/

Test server code

const http = require('http');

const pid = process.pid;

http.createServer((req, res) => {

for (let i=0; i<1e7; i++); // simulate CPU work

res.end(`Handled by process ${pid}`);

}).listen(8080, () => {

console.log(`Started process ${pid}`);

});

Cluster Code

const cluster = require('cluster');

const os = require('os');

if (cluster.isMaster) {

const cpus = os.cpus().length;

console.log(`Forking for ${cpus} CPUs`);

for (let i = 0; i<cpus; i++) {

cluster.fork();

}

} else {

require('./server');

}

Middleware

Probably should go somewhere else but a nice thing to learn. Middleware is called on a route and then we either call next or return. In this case we are looking up the book for all routes which have :bookId

router.use('/books/:bookId', async (req, res, next) => {

Book.findById(req.params.bookId, async (err, book) => {

if (err) {

return res.send(err);

}

if (book) {

req.book = book;

return next();

}

return res.sendStatus(404);

});

});

Now we can replace the function which found the book with the value the middleware added to the request. Before it was

router.put('/books/:bookId', async (req, res) => {

Book.findById(req.params.bookId, async (err, book) => {

if (err) {

return res.send(err);

}

book.title = req.body.title;

book.author = req.body.author;

book.genre = req.body.genre;

book.read = req.body.read;

book = await book.save();

return res.json(book);

});

});

So this now becomes

router.put('/books/:bookId', async (req, res) => {

const { book } = req;

book.title = req.body.title;

book.author = req.body.author;

book.genre = req.body.genre;

book.read = req.body.read;

await req.book.save();

return res.json(book);

});

More Node

Standards

Go to https://node.green/ for a list of ECMAScript features and the Node versions that support them

NVM

Useful Commands

We can manage Node versions using nvm. This allows you to switch between versions of Node.

To show current

nvm current

To show installed

nvm ls

To set the default we can type

nvm alias default 20.0.0

Issues

For me this did not work because I had a directory at ${HOME}/node_modules. Renaming or removing this made it work again. I also have a script, setnode.sh, which does not fix this but which I thought was useful

#!/usr/bin/bash

# initialize nvm

export NVM_DIR="$HOME/.nvm"

[ -s "$NVM_DIR/nvm.sh" ] && \. "$NVM_DIR/nvm.sh" # This loads nvm

DEFAULT_NVM_VERSION=20

nvm cache clear

nvm install $DEFAULT_NVM_VERSION

nvm alias default $DEFAULT_NVM_VERSION

NVERS=$(nvm ls --no-alias | grep -v -- "->" | grep -o "v[0-9.]*")

while read -r ver; do nvm uninstall "$ver"; done <<< "$NVERS"

while read -r ver; do nvm install "$ver"; done <<< "$NVERS"

nvm use $DEFAULT_NVM_VERSION

Starting a Project

To start a project, type the following. This creates a package.json.

npm init

Package Versioning

- ~ Means a subsequent npm i will only update to newer patch releases, e.g. ~4.1.0 will only update to versions starting with 4.1

- ^ Means a subsequent npm i will update to newer minor and patch releases, e.g. ^4.1.0 will update to any 4.x.x version but not to 5.0.0
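
In npm's range syntax ~ permits patch-level changes and ^ permits minor and patch changes. The following is a hand-rolled sketch of those two rules, not npm's own implementation (npm uses the semver package). It ignores pre-release tags and the special handling of 0.x versions, and satisfiesTilde and satisfiesCaret are hypothetical helper names.

```javascript
// Parse 'major.minor.patch' into an array of numbers
const parse = (version) => version.split('.').map(Number);

// Compare two parsed versions: negative, zero or positive
const compare = (a, b) => a[0] - b[0] || a[1] - b[1] || a[2] - b[2];

// ~4.1.0 : same major and minor, patch may increase
function satisfiesTilde(version, base) {
  const v = parse(version);
  const b = parse(base);
  return v[0] === b[0] && v[1] === b[1] && compare(v, b) >= 0;
}

// ^4.1.0 : same major, minor and patch may increase (for major >= 1)
function satisfiesCaret(version, base) {
  const v = parse(version);
  const b = parse(base);
  return v[0] === b[0] && compare(v, b) >= 0;
}

console.log(satisfiesTilde('4.1.9', '4.1.0')); // true
console.log(satisfiesTilde('4.2.0', '4.1.0')); // false
console.log(satisfiesCaret('4.2.0', '4.1.0')); // true
console.log(satisfiesCaret('5.0.0', '4.1.0')); // false
```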

npmrc

We can create a .npmrc in the home directory. This holds options, which seem pretty pointless to me.

progress=false

save=true

save-exact=true

Web Page

Lint

Let's start with the airbnb setup. First non-dev

npm i airbnb

And now dev

npm i eslint eslint-config-airbnb eslint-plugin-import eslint-plugin-jsx-a11y eslint-plugin-react eslint-plugin-react-hooks --save-dev

And our eslintrc.json

{

"extends": "airbnb",

"rules": {

"import/no-extraneous-dependencies": ["error", {"devDependencies": ["**/*Test.js"]}]

},

"env" : {"node": true, "mocha": true }

}

And a Simple Express App

const express = require('express');

const app = express();

app.get('/', (req, res) => {

res.send('hello');

});

app.listen(3000, () => {

console.log('Listening on a port');

});

Debugging

Chalk

Chalk allows you to add colors to messages, e.g.

console.log('listening on port ' + chalk.green('3000'));

debug

This allows you to keep debug output out of production. You switch it on with the environment variable DEBUG, as shown here. Express uses the debug package too!

DEBUG=* node app.js # for all

DEBUG=app node app.js # for app

morgan

Morgan logs web traffic to the console so you don't need the browser.

var morgan = require('morgan')

app.use(morgan('combined')) // lots

app.use(morgan('tiny')) // not lots

Serving html

Basic

These were filed under views. To render them you use res.sendFile(path.join(__dirname,'views','index.html'))

Bootstrap

This was my way.

Install bootstrap jquery

npm i bootstrap jquery --save

Add to app.js

const path = require('path');

const app = express();

app.use('/css', express.static(path.join(__dirname, 'node_modules/bootstrap/dist/css')));

app.use('/js', express.static(path.join(__dirname, 'node_modules/bootstrap/dist/js')));

app.use('/js', express.static(path.join(__dirname, 'node_modules/jquery/dist')));

Use in the html

<html>

<head>

<!-- Bootstrap CSS -->

<link

rel="stylesheet"

href="https://stackpath.bootstrapcdn.com/bootstrap/4.5.0/css/bootstrap.min.css"

integrity="sha384-9aIt2nRpC12Uk9gS9baDl411NQApFmC26EwAOH8WgZl5MYYxFfc+NcPb1dKGj7Sk"

crossorigin="anonymous"

/>

</head>

<body>

<div class="jumbotron">

<div class="container">Serving up truncatechars_html</div>

<button>FRED</button>

</div>

</body>

</html>

Templating Engines

Introduction

The two big engines are

- Pug

- EJS

Pug

This removes lots of boilerplate. Possibly the yaml for html

app.set("views","./src/view")

app.set("view engine", "pug")

An Example

doctype html

html(lang='en')

head

title Hello, World!

body

h1 Hello, World!

div.remark

p Pug rocks!

EJS

EJS stands for Embedded JavaScript. This is like the MS approach with special tags.

app.set("views","./src/view")

app.set("view engine", "ejs")

An example

<!DOCTYPE html>

<html lang="en">

<head>

<%- include('../partials/head'); %>

</head>

<body class="container">

<header>

<%- include('../partials/header'); %>

</header>

<main>

<div class="row">

<div class="col-sm-8">

<div class="jumbotron">

<h1>This is great</h1>

<p>Welcome to templating using EJS</p>

</div>

</div>

<div class="col-sm-4">

<div class="well">

<h3>Look I'm A Sidebar!</h3>

</div>

</div>

</div>

</main>

<footer>

<%- include('../partials/footer'); %>

</footer>

</body>

</html>

Templates

Introduction

To start the web page they went to http://www.bootstrapzero.com. There is no way to download without a pro account from codeply, sigh! In my case I downloaded storystrap. You copy the three parts (css, js and html) to the right places and check you are happy with the locations of the static files. Note this template uses bootstrap 3.7 and does not work with bootstrap 5.

So now we have

With ejs we can generate the navigation in the template. Before, the template contained

<ul class="nav navbar-nav">

<li>

<a href="#">Category</a>

</li>

<li>

<a href="#">Category</a>

</li>

<li>

<a href="#">Category</a>

</li>

<li>

<a href="#">Category</a>

</li>

</ul>

Becomes

<%for(let i=0; i<nav.length; i++) {%>

<li>

<a href="<%=nav[i].link%>"><%=nav[i].title%></a>

</li>

<%}%>

End Solution

const express = require('express');

const chalk = require('chalk');

const debug = require('debug')('app');

const morgan = require('morgan');

const path = require('path');

const app = express();

const port = process.env.PORT || 3010;

app.use(morgan('tiny'));

app.use(express.static(path.join(__dirname, '/public/')));

app.use('/css', express.static(path.join(__dirname, '/node_modules/bootstrap/dist/css')));

app.use('/js', express.static(path.join(__dirname, '/node_modules/bootstrap/dist/js')));

app.use('/js', express.static(path.join(__dirname, '/node_modules/jquery/dist')));

app.set('views', './src/views');

app.set('view engine', 'ejs');

app.get('/', (req, res) => {

res.render('index', {

nav: [

{ link: '/books', title: 'Books' },

{ link: '/authors', title: 'Authors' },

],

title: 'Library',

});

});

app.listen(port, () => {

debug(`listening on port ${chalk.green(port)}`);

});

Routing

Define Controller

A quick reminder of routing in nodejs. We create our controller and return the functions we are going to implement.

...

function bookController(dbConnection) {

async function performGetAll(req, res) {

const request = new sql.Request();

}

async function performGet(req, res) {

}

return {

performGetAll,

performGet,

};

}

module.exports = bookController;

...

Define Router

This defines our routes we are going to be managing. We can pass objects to the controller. E.g. DB Connection.

const express = require('express');

const bookController = require('../controllers/bookController');

function routes(dbConnection) {

const router = express.Router();

const controller = bookController(dbConnection);

router.get('/books', controller.performGetAll);

router.get('/books/:id', controller.performGet);

return router;

}

module.exports = routes;

Create Router in App

We create our router, passing in any parameters. We can have many routers.

const bookRouter = require('./src/routes/bookRouter')(dbConnection);

app.use('/', bookRouter);

Very Basic Authentication

Must research this but here goes

Packages

npm i passport

npm i cookie-parser

npm i express-session

Express Session

We need to make sure that our sessions are secure therefore we need to be careful when creating and storing information in a session.

app.use(session({

secret: ['veryimportantsecret','notsoimportantsecret','highlyprobablysecret'],

name: "secretname", // this helps reduce risk for fingerprinting

cookie: {

httpOnly: true, // do not let browser javascript access cookie ever

secure: true, // only use cookie over https

sameSite: true, // true blocks CORS requests on cookies.

maxAge: 600000, // time is in milliseconds i.e. 600 seconds

ephemeral: true, // delete this cookie when the browser closes

}

}))

Express Setup

Some of these will of course already exist but the complete list is shown for ordering purposes. The main one is the passport config where we are going to store our passport processing.

const express = require('express');

const cookieParser = require('cookie-parser');

const session = require('express-session');

const morgan = require('morgan');

const path = require('path');

const nav = {

nav: [{ link: '/books', title: 'Books' },

{ link: '/authors', title: 'Authors' }],

title: 'Library',

};

const homeRouter = require('../routes/homeRouter')(nav);

const bookRouter = require('../routes/bookRouter')(nav);

const adminRouter = require('../routes/adminRouter')(nav);

const authRouter = require('../routes/authRouter')();

function expressApp(baseDirectory) {

// Instance of express

const expressInstance = express();

// Needed to read body data

expressInstance.use(express.json());

// Needed to parse cookies

expressInstance.use(cookieParser());

// Needed for session support

expressInstance.use(session({

secret: 'keyboard cat',

resave: true,

saveUninitialized: true,

}));

require('./passport/passportConfig')(expressInstance);

// Support URL encoded

expressInstance.use(express.urlencoded({ extended: true }));

expressInstance.use(morgan('tiny'));

expressInstance.use(express.static(path.join(baseDirectory, '/public/')));

expressInstance.use('/css', express.static(path.join(baseDirectory, '/node_modules/bootstrap/dist/css')));

expressInstance.use('/js', express.static(path.join(baseDirectory, '/node_modules/bootstrap/dist/js')));

expressInstance.use('/js', express.static(path.join(baseDirectory, '/node_modules/jquery/dist')));

expressInstance.set('views', './src/views');

expressInstance.set('view engine', 'ejs');

expressInstance.use('/books', bookRouter);

expressInstance.use('/admin', adminRouter);

expressInstance.use('/auth', authRouter);

expressInstance.use('/', homeRouter);

return expressInstance;

}

module.exports = (baseDirectory) => expressApp(baseDirectory);

Passport Config

This is where the passport framework elements live and we keep them in config/passport/passport.js. This could define what our user will look like once authenticated. Note the export with the parameter

const passport = require('passport');

const debug = require('debug')('app:passport');

require('./strategies/local.strategy')();

function passportConfig(app) {

app.use(passport.initialize());

app.use(passport.session());

// Store the user

passport.serializeUser((user, done) => {

done(null, user);

});

// Retrieve the user

passport.deserializeUser((user, done) => {

done(null, user);

});

}

module.exports = (app) => passportConfig(app);

Passport Strategy

This is where we get to write how it works in terms of authentication. Valid or not valid.

const passport = require('passport');

const { Strategy } = require('passport-local');

const mongoRepository = require('../../../repository/mongoRepository');

function localStrategy() {

const repository = mongoRepository();

// Strategy takes a definition of where to find the user name and password

// and a function which verifies them

passport.use(new Strategy(

{

usernameField: 'username',

passwordField: 'password',

},

(username, password, done) => {

repository.getUser(username).then((user) => {

// Guard against an unknown user before comparing passwords

if (user && user.password === password) {

done(null, user);

} else {

done(null, false);

}

}).catch((err) => done(err));

},

));

}

module.exports = () => localStrategy();
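
The strategy compares the stored password with the submitted one directly, which only works if passwords are stored in plain text. As a possible next step, here is a minimal sketch of a salted hash comparison using Node's built-in crypto module. hashPassword and verifyPassword are hypothetical helpers, not part of passport or of the repository used here, and the salt:hash storage format is an assumption for the example.

```javascript
const crypto = require('node:crypto');

// Hash a password with a random salt; store the returned string, not the password
function hashPassword(password) {
  const salt = crypto.randomBytes(16).toString('hex');
  const hash = crypto.scryptSync(password, salt, 64).toString('hex');
  return `${salt}:${hash}`;
}

// Recompute the hash with the stored salt and compare in constant time
function verifyPassword(password, stored) {
  const [salt, hash] = stored.split(':');
  const expected = Buffer.from(hash, 'hex');
  const actual = crypto.scryptSync(password, salt, 64);
  return crypto.timingSafeEqual(expected, actual);
}

const stored = hashPassword('s3cret');
console.log(verifyPassword('s3cret', stored)); // true
console.log(verifyPassword('wrong', stored));  // false
```

With helpers like these, sign up would store hashPassword(password) and the strategy would call verifyPassword(password, user.password) in place of the === check.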

Router

It is the passport.authenticate which is important.

const express = require('express');

const passport = require('passport');

const authController = require('../controllers/authController');

function authRouter() {

const router = express.Router();

const controller = authController();

router.post('/signUp', controller.performSignUp);

router.post('/signIn', passport.authenticate('local', {

successRedirect: '/auth/profile',

failureRedirect: '/',

}));

router.get('/profile', controller.performProfile);

return router;

}

module.exports = authRouter;

Controller

Nothing special but here for reference

const debug = require('debug')('app:authController');

const mongoRepository = require('../repository/mongoRepository');

function authController() {

const repository = mongoRepository();

async function performSignUp(req, res) {

const { username, password } = req.body;

const user = await repository.createUser(username, password);

req.login(user, () => {

res.redirect('/auth/profile');

});

}

async function performProfile(req, res) {

debug('Doing profile');

res.json(req.user);

}

return {

performSignUp, performProfile,

};

}

module.exports = authController;

Guarding Routes

We can use middleware to check for the user. If it is not there then computer says no.

router.use('/', async (req, res, next) => {

if (req.user) {

return next();

}

return res.redirect('/');

});
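
The same guard can be written as a reusable function and tried out without starting a server. This is only a sketch: requireUser is a hypothetical name, and the req and res objects are simple stand-ins that mimic the two things the guard touches (req.user and res.redirect).

```javascript
// Middleware factory: redirectTo is where unauthenticated visitors are sent
function requireUser(redirectTo = '/') {
  return (req, res, next) => {
    if (req.user) {
      return next(); // passport has populated req.user, carry on
    }
    return res.redirect(redirectTo);
  };
}

// Exercise the guard with stand-in req/res objects, no server required
const visited = [];
const fakeRes = { redirect: (url) => visited.push(`redirect:${url}`) };
const guard = requireUser('/');

guard({ user: { username: 'fred' } }, fakeRes, () => visited.push('next'));
guard({}, fakeRes, () => visited.push('next'));

console.log(visited); // [ 'next', 'redirect:/' ]
```

In the router it would be mounted with router.use(requireUser('/')) ahead of the routes that need a signed-in user.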

Databases

MS SQL Example

Instructions for MS SQL can be found at MS SQL Setup. Nothing too shocking here.

Connect to DB

const express = require('express');

const debug = require('debug')('app');

const sql = require('mssql');

const app = express();

const port = process.env.PORT || 3010;

const config = {

user: process.env.DB_USER,

password: process.env.DB_PWD,

database: process.env.DB_NAME,

server: 'localhost',

port: 1433,

pool: {

max: 10,

min: 0,

idleTimeoutMillis: 30000,

},

options: {

encrypt: false, // set to true for azure

trustServerCertificate: true, // true for local dev / self-signed certs

},

};

sql.connect(config).catch((err) => debug(err));

Perform a Query

This is asynchronous so we will need to wait for our results when we use the connection. Here is an example of that.

const request = new sql.Request();

const result = await request.query('select * from books');

// The results are in result.recordset

res.render(

'bookListView',

{

nav,

title: 'Library',

books: result.recordset,

},

);

Perform a Query with Parameters

We can use parameters with this syntax

const request = new sql.Request();

const result = await request.input('id', sql.Int, id)

.query('select * from books where id = @id');

Mongo DB

Here we are going to use mongo db to achieve the same goal as MS SQL. With this we will be using mongodb which is a schemaless approach unlike mongoose.

Connect to DB

This connects to the DB but also inserts the records into the database based off an object books which is not defined here.

const { MongoClient } = require('mongodb');

...

const url = 'mongodb://localhost:27017';

const dbName = 'libraryApp';

const client = await MongoClient.connect(url);

const db = client.db(dbName);

const response = await db.collection('books').insertMany(books);

res.json(response);

client.close();

We can check this worked at the cli using

show dbs

use libraryApp

db.books.find().pretty()

Reading All or one

Clearly we need to pass the connection around and some error handling on failure.

const { ObjectId, MongoClient } = require('mongodb');

const url = 'mongodb://localhost:27017';

const dbName = 'libraryApp';

const client = await MongoClient.connect(url);

const db = client.db(dbName);

const collection = await db.collection('books');

// For all

const books = await collection.find().toArray();

// And for one

const book = await collection.findOne({ _id: new ObjectId(id) });

client.close();

External Service Example

This is an example of calling a service in nodejs. With this service we fetch the data with axios and receive it in xml format. So as ever let's install

npm i axios

npm i xml2js

Installing NodeJS

To install nodejs you need to use nvm as ubuntu is always behind the eight ball. Just do the following. Note this changes your profile so you might want to reboot.

# Get the nvm manager and install

wget -qO- https://raw.githubusercontent.com/creationix/nvm/v0.39.3/install.sh | bash

# Until you reboot, reload your profile

source ~/.profile

# Check version of nvm

nvm --version

# List node versions

nvm ls-remote

# Install your fav

nvm install 20.0.0

# Check working version

node -v

Replace Buffer

From Sindre Sorhus, on replacing Buffer with Uint8Array

+import {stringToBase64} from 'uint8array-extras';

-Buffer.from(string).toString('base64');

+stringToBase64(string);

+import {uint8ArrayToHex} from 'uint8array-extras';

-buffer.toString('hex');

+uint8ArrayToHex(uint8Array);

const bytes = getBytes();

+const view = new DataView(bytes.buffer);

-const value = bytes.readInt32BE(1);

+const value = view.getInt32(1);

import crypto from 'node:crypto';

-import {Buffer} from 'node:buffer';

+import {isUint8Array} from 'uint8array-extras';

export default function hash(data) {

- if (!(typeof data === 'string' || Buffer.isBuffer(data))) {

+ if (!(typeof data === 'string' || isUint8Array(data))) {

throw new TypeError('Incorrect type.');

}

return crypto.createHash('md5').update(data).digest('hex');

}

Typescript

-import {Buffer} from 'node:buffer';

-export function getSize(input: string | Buffer): number { … }

+export function getSize(input: string | Uint8Array): number { … }

And add these rules to the eslint for javascript

{

"no-restricted-globals": [

"error",

{

"name": "Buffer",

"message": "Use Uint8Array instead."

}

],

"no-restricted-imports": [

"error",

{

"name": "buffer",

"message": "Use Uint8Array instead."

},

{

"name": "node:buffer",

"message": "Use Uint8Array instead."

}

],

}

For typescript we can add this to @typescript-eslint/ban-types

"@typescript-eslint/ban-types": [

"error",

{

"extendDefaults": true,

"types": {

"object": false,

"{}": false,

"Buffer": {

"message": "Use Uint8Array instead.",

"suggest": ["Uint8Array"]

}

}

}

],

Module Wars

CommonJS

Node modules were written as CommonJS modules. This means they used

// To export

module.exports.sum = (x, y) => x + y;

// To Import

const {sum} = require('./util.js');

ESM

ESM uses this syntax

// To export

export const sum = (x, y) => x + y;

// To Import

import {sum} from './util.js';

Differences Under the hood

In CommonJS, require() is synchronous; it doesn't return a promise or call a callback.

In ESM, the module loader runs in asynchronous phases. In the first phase, it parses the script to detect calls to import and export without running the imported script.
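
The synchronous versus asynchronous difference can be seen from a CommonJS script, where require() hands back the exports straight away while a dynamic import() goes through the ESM loader and returns a promise. A minimal sketch, using the built-in path module so nothing needs installing; pathSync and pending are names chosen for the example.

```javascript
// CommonJS: require() returns the module's exports immediately
const pathSync = require('node:path');
console.log(typeof pathSync.join); // 'function'

// Dynamic import() goes through the ESM loader and always returns a promise
const pending = import('node:path');
console.log(pending instanceof Promise); // true

pending.then((pathAsync) => {
  // The module namespace only becomes available once the promise resolves
  console.log(typeof pathAsync.join); // 'function'
});
```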