Neural Networks


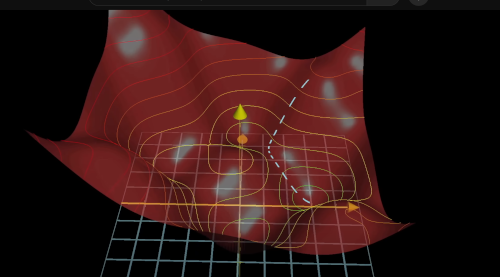


Latest revision as of 19:45, 21 January 2025

Introduction

It is 2025 and time for me to put more effort into understanding AI. So I will start, hopefully, at the beginning.

What are Neural Networks

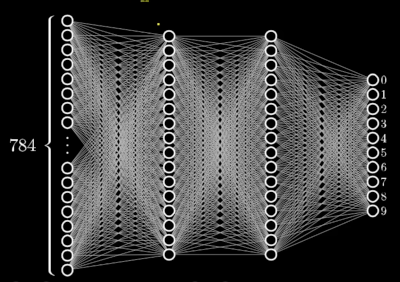

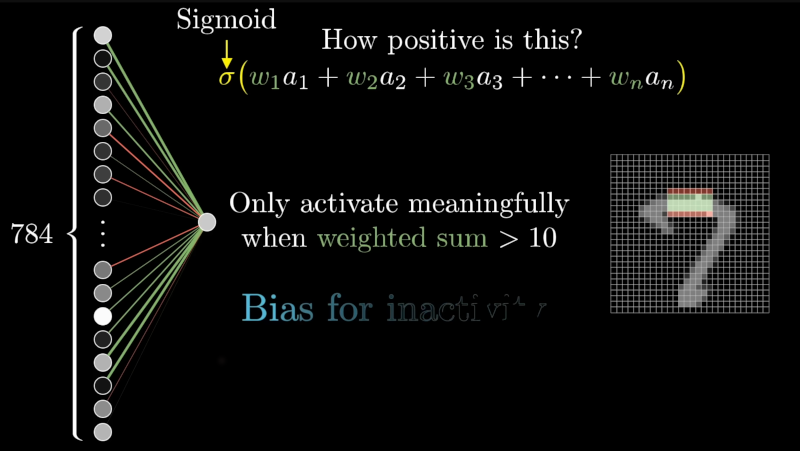

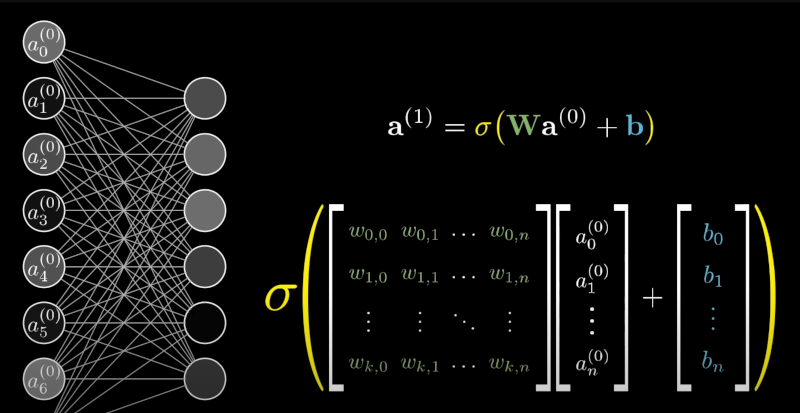

The YouTube video [here] showed an example network used to identify a digit in a 28 x 28 pixel picture. The video talks about breaking the picture up into its pixels and passing them through layers. Each neuron in a layer produces a value for the thing it is looking for, which is built from the measurements coming in (the values from the previous layer), each multiplied by a weight, plus a bias.

For each neuron in a layer, the values from the previous layer are multiplied by their weights and added together, the bias is added, and the result is squashed into the range 0 to 1.

This can be expressed as

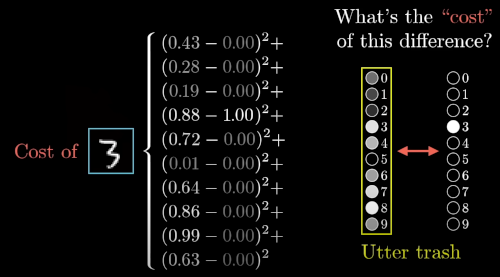
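To make the weights-and-bias idea a bit more concrete for myself, here is a minimal sketch of one layer in plain Python. It assumes the squashing function is the sigmoid, and the layer size of 16, the random weights and the names `sigmoid` and `layer_forward` are my own choices for illustration, not something taken from the video.

```python
import math
import random


def sigmoid(z):
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(activations, weights, biases):
    """Compute the next layer's values from the previous layer's values.

    weights[j][i] is the weight from input i to neuron j,
    biases[j] is the bias of neuron j.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the inputs, plus the bias
        z = sum(w * a for w, a in zip(neuron_weights, activations)) + bias
        outputs.append(sigmoid(z))
    return outputs


random.seed(0)
n_inputs = 28 * 28   # one input per pixel
n_neurons = 16       # assumed size of the next layer

# Made-up pixel brightness values and untrained (random) weights and biases
pixels = [random.random() for _ in range(n_inputs)]
weights = [[random.uniform(-1, 1) for _ in range(n_inputs)]
           for _ in range(n_neurons)]
biases = [random.uniform(-1, 1) for _ in range(n_neurons)]

hidden = layer_forward(pixels, weights, biases)
print(len(hidden), "values, each between 0 and 1")
```

With random weights the output means nothing yet; training is what adjusts the weights and biases so the values become useful.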

I was expecting to have to define somewhere what to look for, e.g. the edges, the lack of any pixels and so on. But the video went off to speak about training instead. It spoke of the cost function, which, as I understood it, takes the differences between what the network produced and what it should have produced and squares them, so that bad answers give a large cost. To be honest I will need to brush up on my linear algebra to make some sense of what was going on. But knowing what you don't know is a start.
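As a rough check of my understanding of the cost, here is a small sketch in Python. It assumes the cost of one example is the sum of the squared differences between the ten outputs and the desired outputs, and that the overall cost is the average over the examples. The function names and the sample numbers are made up for illustration.

```python
def example_cost(outputs, desired):
    """Sum of squared differences for a single training example."""
    return sum((o - d) ** 2 for o, d in zip(outputs, desired))


def average_cost(all_outputs, all_desired):
    """Average of the per-example costs over a set of examples."""
    costs = [example_cost(o, d) for o, d in zip(all_outputs, all_desired)]
    return sum(costs) / len(costs)


# Desired output for the digit 3: only the neuron for "3" should be on
desired = [0.0] * 10
desired[3] = 1.0

# A made-up good answer and a made-up bad answer
good = [0.0] * 10
good[3] = 0.9
bad = [0.5] * 10

print("good answer cost:", example_cost(good, desired))
print("bad answer cost:", example_cost(bad, desired))
print("average cost:", average_cost([good, bad], [desired, desired]))
```

The bad answer gets the larger cost, which is the number training tries to make as small as possible.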

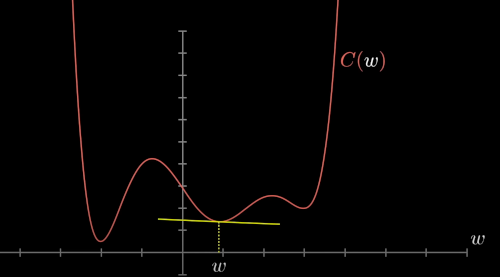

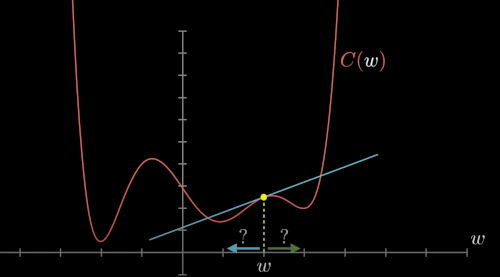

Next we looked at finding the minimum of the function. I kind of understand what is being done, but not yet why.

They mentioned

- starting at a random point on the function,

- calculating the slope of the function where you are,

- using the sign of the slope to decide which direction to move in to get a smaller value,

- if the slope is positive, move left; if the slope is negative, move right
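The steps above can be sketched in a few lines of Python. This is only my own illustration: the function `f` is a simple made-up curve with its lowest point at x = 3, the slope is estimated numerically, and the step size and number of steps are arbitrary assumptions.

```python
import random


def f(x):
    """A made-up curve whose lowest point is at x = 3."""
    return (x - 3.0) ** 2 + 1.0


def slope(x, h=1e-6):
    """Estimate the slope of f at x from two nearby points."""
    return (f(x + h) - f(x - h)) / (2 * h)


def gradient_descent_1d(x, step_size=0.1, steps=200):
    """Repeatedly step against the slope, starting from x."""
    for _ in range(steps):
        # Positive slope: x decreases (move left).
        # Negative slope: x increases (move right).
        x -= step_size * slope(x)
    return x


random.seed(1)
start = random.uniform(-10.0, 10.0)  # a random starting point
lowest = gradient_descent_1d(start)
print("started at", start, "and ended near", lowest)
```

Multiplying the step by the slope also means the steps get smaller as the curve flattens out near the bottom, which helps to avoid overshooting.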

And here we are in 3D, looking for the lowest point of the function.

This walking downhill to find the lowest point, taking small steps and checking the slope at each one, is known as gradient descent.
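The same idea with two inputs, which is the 3D picture, might look like the sketch below. Again this is my own illustration under assumptions: the bowl-shaped function `f`, its hand-written slopes in `gradient`, the starting point and the step size are all made up, and a real network has thousands of inputs to the cost rather than two.

```python
def f(x, y):
    """A made-up bowl whose lowest point is at x = 1, y = -2."""
    return (x - 1.0) ** 2 + 2.0 * (y + 2.0) ** 2


def gradient(x, y):
    """The slope of f in the x direction and in the y direction."""
    return 2.0 * (x - 1.0), 4.0 * (y + 2.0)


def gradient_descent_2d(x, y, step_size=0.1, steps=200):
    """Step downhill in both directions at once."""
    for _ in range(steps):
        gx, gy = gradient(x, y)
        x -= step_size * gx
        y -= step_size * gy
    return x, y


x_min, y_min = gradient_descent_2d(5.0, 5.0)
print("lowest point found near", x_min, y_min, "with height", f(x_min, y_min))
```

Each direction gets its own slope, and the walker moves against all of them together, which is the downhill direction on the surface.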