LLM and Ollama

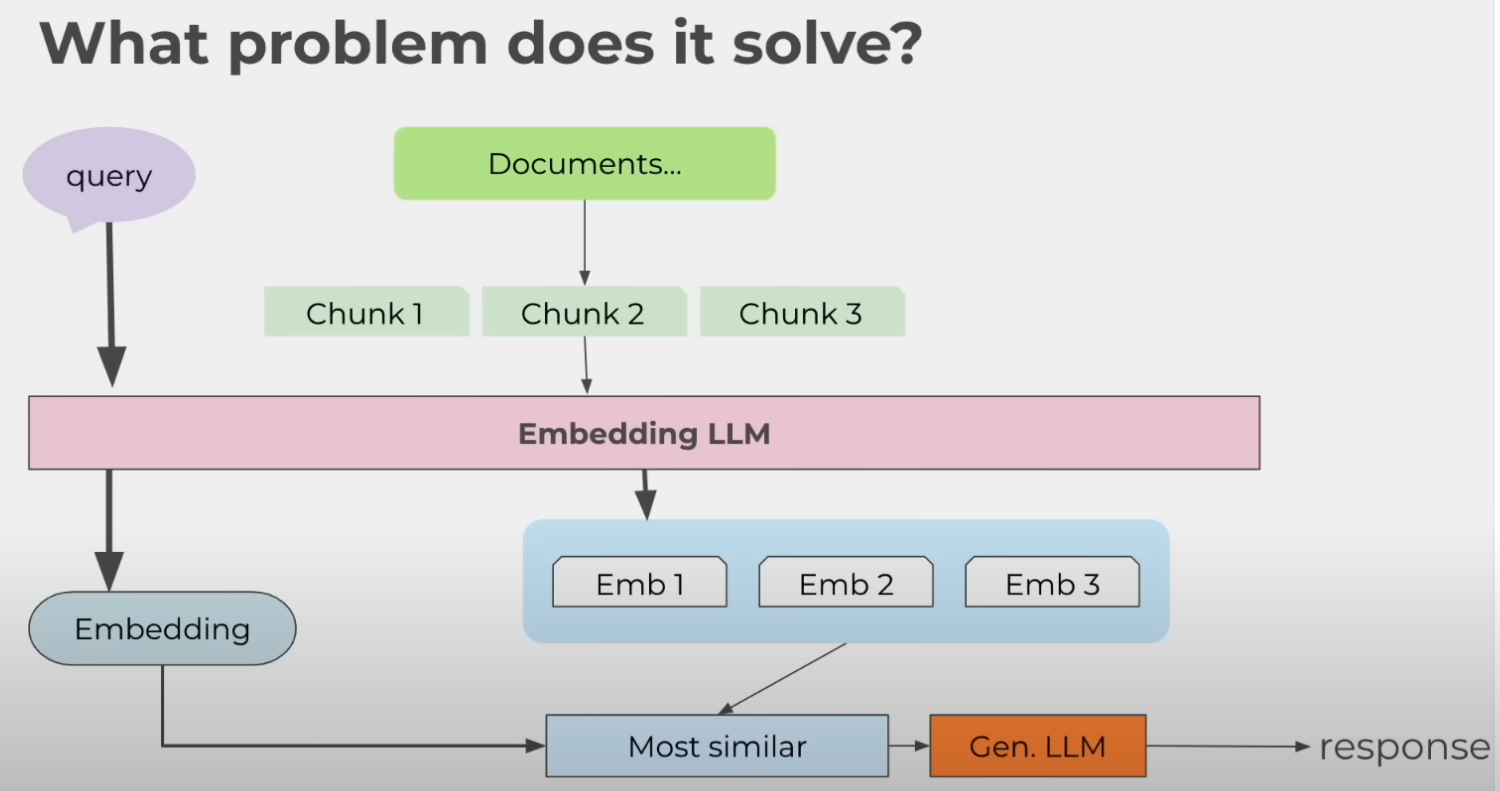

This is a page about Ollama and, you guessed it, LLMs. I have downloaded several models and got a local UI running on top of them. The plan is to build something like Claude Desktop in TypeScript and Golang. First, some theory in Python from [https://youtu.be/GWB9ApTPTv4?si=kP2V0AOANH8rEiDm here]. Here is the problem I am trying to solve.<br>

[[File:Ollama problem.png|500px]]<br>

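=Using the Remote Ollama=

You can point the ollama CLI at a server on another machine by setting the host:

<syntaxhighlight lang="bash">
export OLLAMA_HOST=192.blah.blah.blah
</syntaxhighlight>

The server has to listen on an address the client can reach. By default Ollama binds only to 127.0.0.1, so on the server start it with <code>OLLAMA_HOST=0.0.0.0 ollama serve</code>.

CLI commands now run against the remote server, for example:

<syntaxhighlight lang="bash">
ollama run llama3.2:latest
</syntaxhighlight>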
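Any client you build (the Claude Desktop-style app, say) needs the same information the CLI gets from OLLAMA_HOST. A minimal Go sketch of turning such a value into a base URL for the REST API. The helper name <code>ollamaBaseURL</code> is hypothetical, and the normalization rules (local default when empty, <code>http://</code> when no scheme is given, port 11434 when none is given) are assumptions for this example, not a copy of Ollama's own parsing.

<syntaxhighlight lang="go">
package main

import (
	"fmt"
	"net"
	"net/url"
	"os"
	"strings"
)

// ollamaBaseURL turns an OLLAMA_HOST-style value into a base URL for the REST API.
// Assumed rules for this sketch: empty means a local server, a value without a
// scheme gets "http://", and a host without a port gets Ollama's default 11434.
// A value that already has a scheme is passed through unchanged.
func ollamaBaseURL(host string) (string, error) {
	host = strings.TrimSpace(host)
	if host == "" {
		host = "127.0.0.1"
	}
	if strings.Contains(host, "://") {
		u, err := url.Parse(host)
		if err != nil {
			return "", err
		}
		return strings.TrimSuffix(u.String(), "/"), nil
	}
	u, err := url.Parse("http://" + host)
	if err != nil {
		return "", err
	}
	if u.Port() == "" {
		// JoinHostPort re-adds brackets for IPv6 literals.
		u.Host = net.JoinHostPort(u.Hostname(), "11434")
	}
	return u.Scheme + "://" + u.Host, nil
}

func main() {
	base, err := ollamaBaseURL(os.Getenv("OLLAMA_HOST"))
	if err != nil {
		fmt.Fprintln(os.Stderr, "invalid OLLAMA_HOST:", err)
		os.Exit(1)
	}
	fmt.Println("Ollama API base URL:", base)
}
</syntaxhighlight>

With <code>OLLAMA_HOST=192.168.1.50</code> this prints <code>http://192.168.1.50:11434</code>, the same address the curl examples further down talk to.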

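=Model Info=

Taking llama3.2 (the 3B model) as an example, the model information (for instance from <code>ollama show llama3.2</code>) reads:

*Architecture: llama - the network design the model is built on (Meta's Llama family)

*Parameters: 3.2B - 3.2 billion learned weights; bigger models need more memory and compute

*Context length: 131072 - the maximum number of tokens (prompt plus generated reply) the model can take in at once

*Embedding length: 3072 - the size of the vector that represents each token inside the model

*Quantization: Q4_K_M - the weights are stored at roughly 4 bits each using llama.cpp's "K-quant" scheme (medium variant), trading a little accuracy for a much smaller download and memory footprint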
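To see why parameter count and quantization matter, note that the memory needed just to hold the weights is about parameters × bits per weight ÷ 8 bytes. The Go sketch below applies that to the 3.2B figure. It ignores the KV cache that grows with the context window, activations and runtime overhead. It also treats Q4_K_M as a flat 4 bits per weight, which is an assumption: K-quants keep some tensors at higher precision, so real files come out somewhat larger. <code>approxWeightBytes</code> is a hypothetical helper name.

<syntaxhighlight lang="go">
package main

import "fmt"

// approxWeightBytes estimates the memory needed just to store a model's weights:
// parameters × bits per weight ÷ 8 bits per byte. It ignores the KV cache,
// activations and runtime overhead.
func approxWeightBytes(params, bitsPerWeight float64) float64 {
	return params * bitsPerWeight / 8
}

func main() {
	const params = 3.2e9 // "Parameters: 3.2B" from the model info
	precisions := []struct {
		name string
		bits float64
	}{
		{"16-bit (unquantized)", 16},
		{"8-bit", 8},
		{"~4-bit (Q4-style)", 4},
	}
	for _, p := range precisions {
		fmt.Printf("%-22s ~%.1f GB\n", p.name, approxWeightBytes(params, p.bits)/1e9)
	}
}
</syntaxhighlight>

For the 3.2B model this prints roughly 6.4 GB, 3.2 GB and 1.6 GB, which is why the default llama3.2 download is a 4-bit quantization.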

You can customize a model by writing a Modelfile and building it with <code>ollama create</code>. For example:

<syntaxhighlight lang="txt">
FROM llama3.2
# set the temperature: higher is more creative, lower is more focused
PARAMETER temperature 0.3
SYSTEM """
You are Bill, a very smart assistant who answers questions succinctly and informatively.
"""
</syntaxhighlight>

Save this as <code>Modelfile</code>, then create a new model called bill from it and run it:

<syntaxhighlight lang="bash">
ollama create bill -f ./Modelfile
ollama run bill
</syntaxhighlight>
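Here is why temperature changes "creativity". The model scores every candidate next token, and those scores (logits) are divided by the temperature before a softmax turns them into probabilities. A low value such as the 0.3 in the Modelfile concentrates probability on the top-scoring token, while a high value spreads it out. The minimal Go sketch below uses made-up logits. Ollama's real sampler also applies other settings such as top_k and top_p, which this ignores, and <code>softmaxWithTemperature</code> is a hypothetical name.

<syntaxhighlight lang="go">
package main

import (
	"fmt"
	"math"
)

// softmaxWithTemperature turns raw next-token scores (logits) into probabilities
// after dividing each score by the temperature t. Smaller t sharpens the
// distribution toward the top token; larger t flattens it.
func softmaxWithTemperature(logits []float64, t float64) []float64 {
	if t <= 0 {
		panic("temperature must be positive in this sketch")
	}
	// Subtract the largest logit before exponentiating to avoid overflow;
	// this does not change the resulting probabilities.
	maxLogit := math.Inf(-1)
	for _, l := range logits {
		maxLogit = math.Max(maxLogit, l)
	}
	probs := make([]float64, len(logits))
	sum := 0.0
	for i, l := range logits {
		probs[i] = math.Exp((l - maxLogit) / t)
		sum += probs[i]
	}
	for i := range probs {
		probs[i] /= sum
	}
	return probs
}

func main() {
	logits := []float64{2.0, 1.0, 0.5} // made-up scores for three candidate tokens
	for _, t := range []float64{0.3, 1.0, 1.5} {
		fmt.Printf("temperature %.1f -> %.3f\n", t, softmaxWithTemperature(logits, t))
	}
}
</syntaxhighlight>

With these made-up scores the top token's probability is about 0.96 at temperature 0.3 but only about 0.53 at 1.5, so low temperatures give more predictable answers.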

=REST API Interaction=

The same server also exposes a REST API on port 11434, so we can send questions to the model with plain HTTP:

<syntaxhighlight lang="bash">
curl http://192.blah.blah.blah:11434/api/generate -d '{
  "model": "llama3.2",
  "prompt": "Why is the sky blue?",
  "stream": false
}'
</syntaxhighlight>
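With <code>"stream": false</code> the server answers with a single JSON object whose <code>response</code> field holds the generated text. Since the plan is a Go client, here is a minimal sketch of the same call using only the standard library. The helper names are hypothetical, the host is the page's placeholder, and only the <code>response</code> field is decoded.

<syntaxhighlight lang="go">
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"os"
	"time"
)

// generateRequest mirrors the JSON body sent by the curl example.
type generateRequest struct {
	Model  string `json:"model"`
	Prompt string `json:"prompt"`
	Stream bool   `json:"stream"`
}

// generateResponse keeps only the field this sketch reads from the reply.
type generateResponse struct {
	Response string `json:"response"`
}

func encodeGenerateRequest(model, prompt string) ([]byte, error) {
	return json.Marshal(generateRequest{Model: model, Prompt: prompt, Stream: false})
}

func decodeGenerateResponse(r io.Reader) (string, error) {
	var out generateResponse
	if err := json.NewDecoder(r).Decode(&out); err != nil {
		return "", err
	}
	return out.Response, nil
}

// generate POSTs one prompt to /api/generate and returns the model's answer.
func generate(client *http.Client, baseURL, model, prompt string) (string, error) {
	body, err := encodeGenerateRequest(model, prompt)
	if err != nil {
		return "", err
	}
	resp, err := client.Post(baseURL+"/api/generate", "application/json", bytes.NewReader(body))
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		msg, _ := io.ReadAll(resp.Body)
		return "", fmt.Errorf("ollama returned %s: %s", resp.Status, bytes.TrimSpace(msg))
	}
	return decodeGenerateResponse(resp.Body)
}

func main() {
	const baseURL = "http://192.blah.blah.blah:11434" // replace with your server's address
	client := &http.Client{Timeout: 5 * time.Minute} // generation can be slow on CPU
	answer, err := generate(client, baseURL, "llama3.2", "Why is the sky blue?")
	if err != nil {
		fmt.Fprintln(os.Stderr, "request failed:", err)
		return
	}
	fmt.Println(answer)
}
</syntaxhighlight>

Keeping encoding and decoding in separate functions makes them easy to test without a running server.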

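To hold a conversation, switch to the <code>/api/chat</code> endpoint, which takes a list of <code>messages</code> instead of a single <code>prompt</code>. Adding <code>"format": "json"</code> to the payload makes the model reply in JSON; it also helps to ask for JSON in the prompt itself:

<syntaxhighlight lang="bash">
curl http://192.blah.blah.blah:11434/api/chat -d '{
  "model": "llama3.2",
  "messages": [
    { "role": "user", "content": "Why is the sky blue? Respond using JSON." }
  ],
  "stream": false,
  "format": "json"
}'
</syntaxhighlight>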
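The chat endpoint is stateless. Each request carries the whole conversation, so a desktop-style client has to keep the history itself and append the assistant's reply after every turn. The Go sketch below shows that loop, assuming the non-streaming response shape: a single object whose <code>message</code> field holds the reply. <code>Message</code>, <code>chat</code> and <code>decodeChatReply</code> are names made up for this example.

<syntaxhighlight lang="go">
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"os"
	"time"
)

// Message is one turn of the conversation, as in the "messages" array.
type Message struct {
	Role    string `json:"role"` // "system", "user" or "assistant"
	Content string `json:"content"`
}

type chatRequest struct {
	Model    string    `json:"model"`
	Messages []Message `json:"messages"`
	Stream   bool      `json:"stream"`
	Format   string    `json:"format,omitempty"`
}

type chatResponse struct {
	Message Message `json:"message"`
}

func decodeChatReply(r io.Reader) (Message, error) {
	var out chatResponse
	err := json.NewDecoder(r).Decode(&out)
	return out.Message, err
}

// chat sends the full history plus a new user turn and returns the history
// extended with the assistant's reply. The server does not remember earlier
// turns, so the caller keeps the history and resends it every time.
func chat(client *http.Client, baseURL, model string, history []Message, userText string) ([]Message, error) {
	history = append(history, Message{Role: "user", Content: userText})
	body, err := json.Marshal(chatRequest{Model: model, Messages: history, Stream: false, Format: "json"})
	if err != nil {
		return nil, err
	}
	resp, err := client.Post(baseURL+"/api/chat", "application/json", bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		msg, _ := io.ReadAll(resp.Body)
		return nil, fmt.Errorf("ollama returned %s: %s", resp.Status, bytes.TrimSpace(msg))
	}
	reply, err := decodeChatReply(resp.Body)
	if err != nil {
		return nil, err
	}
	return append(history, reply), nil
}

func main() {
	const baseURL = "http://192.blah.blah.blah:11434" // replace with your server's address
	client := &http.Client{Timeout: 5 * time.Minute}
	var history []Message
	for _, q := range []string{
		"Why is the sky blue? Respond using JSON.",
		"And why are sunsets red? Respond using JSON.",
	} {
		var err error
		history, err = chat(client, baseURL, "llama3.2", history, q)
		if err != nil {
			fmt.Fprintln(os.Stderr, "chat failed:", err)
			return
		}
		// With "format": "json" the reply content is itself a JSON document.
		var parsed map[string]any
		if err := json.Unmarshal([]byte(history[len(history)-1].Content), &parsed); err != nil {
			fmt.Fprintln(os.Stderr, "reply was not valid JSON:", err)
			return
		}
		fmt.Println(parsed)
	}
}
</syntaxhighlight>

The second question only makes sense because the first question and answer are resent with it.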

All of the endpoints and options are documented [https://github.com/ollama/ollama/blob/main/docs/api.md here].
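The examples above set <code>"stream": false</code>. If you leave it out, <code>/api/generate</code> streams its answer instead. It sends a sequence of JSON objects, one per line, each carrying a text fragment in <code>response</code>, with the last one marked <code>"done": true</code>. That suits a chat UI, which can show text as it is produced. The hedged Go sketch below reads such a stream. <code>readStream</code> is a hypothetical helper, and only the two fields it needs are decoded.

<syntaxhighlight lang="go">
package main

import (
	"bufio"
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"os"
	"strings"
)

// streamChunk holds the fields this sketch reads from each streamed line.
type streamChunk struct {
	Response string `json:"response"`
	Done     bool   `json:"done"`
}

// readStream reads newline-delimited JSON objects, passes each text fragment
// to onChunk as it arrives, and returns the full text once "done" is true.
func readStream(r io.Reader, onChunk func(string)) (string, error) {
	var full strings.Builder
	sc := bufio.NewScanner(r)
	sc.Buffer(make([]byte, 0, 64*1024), 1024*1024) // allow long lines
	for sc.Scan() {
		line := bytes.TrimSpace(sc.Bytes())
		if len(line) == 0 {
			continue
		}
		var c streamChunk
		if err := json.Unmarshal(line, &c); err != nil {
			return full.String(), err
		}
		onChunk(c.Response)
		full.WriteString(c.Response)
		if c.Done {
			return full.String(), nil
		}
	}
	if err := sc.Err(); err != nil {
		return full.String(), err
	}
	// The stream ended without a "done": true object.
	return full.String(), io.ErrUnexpectedEOF
}

func main() {
	const baseURL = "http://192.blah.blah.blah:11434" // replace with your server's address
	// "stream" is omitted, so the server uses its default and streams.
	body := `{"model": "llama3.2", "prompt": "Why is the sky blue?"}`
	resp, err := http.Post(baseURL+"/api/generate", "application/json", strings.NewReader(body))
	if err != nil {
		fmt.Fprintln(os.Stderr, "request failed:", err)
		return
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		fmt.Fprintln(os.Stderr, "ollama returned", resp.Status)
		return
	}
	// Print each fragment as soon as it arrives, like a chat UI would.
	if _, err := readStream(resp.Body, func(s string) { fmt.Print(s) }); err != nil {
		fmt.Fprintln(os.Stderr, "\nstream error:", err)
		return
	}
	fmt.Println()
}
</syntaxhighlight>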

Revision as of 22:14, 31 March 2025
